I’m creating this post as a bit of a diary for building an Autonomous Agent that can answer questions using the web. I’m building this agent using TypeScript and my AlphaWave library, and if you want to follow along code-wise, you can find the sample here.

So in an effort to accelerate my development, I’ve spent the last day or so adding support for LangChain.js to AlphaWave… It’s actually two-way support. You can pretty much use any LangChain component in an AlphaWave project, and I package AlphaWave itself up as a new model that can be used in LangChain. So if you want to add validating and self-repairing models to your LangChain project you can now do that (for JS at least).

I also created Command wrappers for all the most interesting LangChain tools so that I could use them from AlphaWave based Agents. One nice benefit is that I was able to add JSON Schema validation to all of LangChain’s tools, which makes it impossible for a tool to be called with missing parameters. So with all that done… On to my actual goal: building an agent that can use these new tools to answer the user’s questions using stuff from the web.
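To make the missing-parameter guarantee concrete, here is a minimal sketch of the idea. It is not AlphaWave’s or LangChain’s actual API: `ParamSchema`, `Command`, `validateParams`, `runCommand` and `fakeSearch` are all hypothetical names, and the schema is a heavily simplified stand-in for real JSON Schema.

```typescript
// Hypothetical, simplified stand-in for a JSON Schema: only "required" and
// primitive "type" checks are modelled here.
interface ParamSchema {
  required: string[];
  properties: Record<string, { type: "string" | "number" | "boolean" }>;
}

// Hypothetical command shape: a tool plus the schema its parameters must match.
interface Command {
  name: string;
  schema: ParamSchema;
  execute: (params: Record<string, unknown>) => string;
}

// Returns a list of human-readable problems; an empty list means valid.
function validateParams(schema: ParamSchema, params: Record<string, unknown>): string[] {
  const errors: string[] = [];
  for (const key of schema.required) {
    if (params[key] === undefined || params[key] === null) {
      errors.push(`missing required parameter "${key}"`);
    }
  }
  for (const key of Object.keys(schema.properties)) {
    const value = params[key];
    const expected = schema.properties[key].type;
    if (value !== undefined && value !== null && typeof value !== expected) {
      errors.push(`parameter "${key}" should be of type ${expected}`);
    }
  }
  return errors;
}

// The tool only runs when validation passes; otherwise the errors are
// returned so they can be fed back to the model.
function runCommand(
  command: Command,
  params: Record<string, unknown>
): { ok: boolean; output: string } {
  const errors = validateParams(command.schema, params);
  if (errors.length > 0) {
    return { ok: false, output: errors.join("; ") };
  }
  return { ok: true, output: command.execute(params) };
}

// Hypothetical search command used only to exercise the wrapper.
const fakeSearch: Command = {
  name: "search",
  schema: { required: ["query"], properties: { query: { type: "string" } } },
  execute: (params) => `results for ${String(params.query)}`,
};
```

Calling `runCommand(fakeSearch, {})` never reaches `execute`; the caller gets the validation message back instead, which is the piece a repair step can hand to the model.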

tl;dr: we’re not there yet. I was asking a simple question, “number of a plumber near seattle”, and I sort of got it to work (I’ll break that down below), but I had to completely rewrite both the Serp and WebBrowser tools to get even that far… I have a lot of ideas for how to get it there, but we’re not there yet.

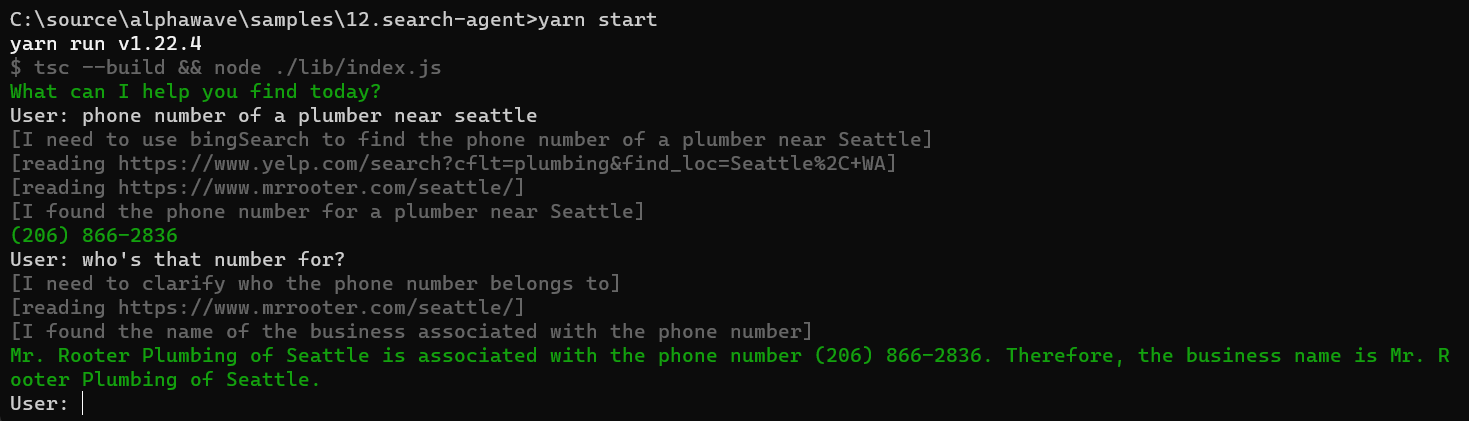

So let me start with a screen shot of my last run of “search-agent” (this is gpt-3.5-turbo by the way):

We can see that it starts off pretty well. It asks what I’m looking for, then uses the bingSearch command to find some pages to read, then it starts using the webBrowser command to read the links on those pages (these are the two tools I had to completely rewrite). Eventually it finds a phone number on the Better Business Bureau website, which already has me suspicious. So I follow up asking the name of that plumber and it does what I would have hoped… It looks to be using the webBrowser command to ask a follow-up question of the BBB site, and sure enough the phone number was hallucinated. I could turn on more detailed logging to see what’s happening here, but I can already tell you that the top level agent essentially felt like it was running out of options to answer the question so it made something up… I could go into much greater depth here but let me just give some initial observations and follow-up approaches I’m planning to try:

Observations:

- If you give an agent both a `Serp` and a `webBrowser` command it can’t always decide which one it wants to use. They’re too similar. It will often pick the search tool but it will occasionally jump straight to the browser. Including both is a dicey proposition.
- When it calls the `Serp` tool and gets back a list of links it’s somewhat 50/50 as to whether it will do the work to go through all the links… I’ve already seen that asking these things to do anything in a mechanical loop is dicey. Arguably, GPT-3.5 has a penchant for stopping tasks short, but you should generally avoid asking them to run in mechanical loops.
- If the top level Agent sees the answer it will convey that answer truthfully. But as the task progresses there’s an ever growing risk that it will just make something up. I tried updating the top level agent’s prompt to better ground it in the truth of the data being returned from the commands but that stopped it from looping.

On the plus side… AlphaWave’s automatic repair logic is working flawlessly. Every run I did had 1 - 2 bad responses and AlphaWave repaired every single one. I did notice that the agent would sometimes change its mind about what command it wanted to do next when a repair occurred, but it seemed to always change its mind in a more desirable direction. It makes me wonder whether a lot of the response errors the model makes are just because it’s undecided as to how it should respond or, more likely, it goes down a response branch it starts to regret.
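For anyone who hasn’t seen a validate-and-repair loop before, here is a rough sketch of the general pattern. This is an illustration under my own assumptions, not AlphaWave’s actual implementation: `Model`, `Validator`, `completeWithRepair` and `validateCommand` are hypothetical names, and the feedback wording is made up.

```typescript
// Hypothetical types: a model is anything that maps a prompt to raw text.
type Model = (prompt: string) => Promise<string>;

interface Validation<T> {
  valid: boolean;
  value?: T;
  feedback?: string;
}

type Validator<T> = (raw: string) => Validation<T>;

// Ask the model, validate the response, and on failure re-prompt with the
// validator's feedback, allowing up to maxRepairs extra attempts.
async function completeWithRepair<T>(
  model: Model,
  prompt: string,
  validate: Validator<T>,
  maxRepairs = 3
): Promise<{ value: T; repairs: number }> {
  let currentPrompt = prompt;
  for (let attempt = 0; attempt <= maxRepairs; attempt++) {
    const raw = await model(currentPrompt);
    const result = validate(raw);
    if (result.valid && result.value !== undefined) {
      return { value: result.value, repairs: attempt };
    }
    const feedback = result.feedback ?? "unknown error";
    currentPrompt = `${prompt}\n\nYour last response was invalid: ${feedback}\nPlease try again.`;
  }
  throw new Error(`No valid response after ${maxRepairs} repair attempts`);
}

// Example validator: the response must be JSON with a string "command" field.
const validateCommand: Validator<{ command: string }> = (raw) => {
  try {
    const parsed = JSON.parse(raw);
    if (parsed !== null && typeof parsed === "object" && typeof parsed.command === "string") {
      return { valid: true, value: { command: parsed.command } };
    }
    return { valid: false, feedback: 'expected a JSON object with a string "command" field' };
  } catch {
    return { valid: false, feedback: "response was not valid JSON" };
  }
};
```

Because the repaired attempt is a fresh completion, nothing forces the model to pick the same command again, which fits with the “changes its mind” behavior I saw.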

Next Steps for Search Agent:

- I’m planning to essentially combine the `Serp` and `WebBrowser` commands into one single command that can do both web search and browse results. This will do 2 things: it will remove some of the confusion for the top level agent as to which tool to choose, and it will remove the need for the agent to run a mechanical loop, as the Serp tool can drive that loop via code.
- The other issue is the `WebBrowser` tool doesn’t go deep enough, so I have a bunch of ideas for how to get it to browse a site looking for answers more like a human does.
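The combined command from the first bullet could look roughly like the sketch below. I haven’t built this yet, so treat everything here as an assumption: `SearchFn`, `BrowseFn`, `searchAndBrowse` and the `maxPages` limit are hypothetical, and the real search and browse tools would be injected in place of the function parameters.

```typescript
// Hypothetical signatures for the two underlying tools; both are injected.
type SearchFn = (query: string) => Promise<string[]>; // returns result URLs
type BrowseFn = (url: string, question: string) => Promise<string | null>; // answer, or null if none

interface SearchAnswer {
  answer: string;
  source: string;
}

// One command that searches, then walks the results in code until a page
// yields an answer. The agent never has to run the loop itself.
async function searchAndBrowse(
  question: string,
  search: SearchFn,
  browse: BrowseFn,
  maxPages = 5
): Promise<SearchAnswer | null> {
  const urls = await search(question);
  for (const url of urls.slice(0, maxPages)) {
    try {
      const answer = await browse(url, question);
      if (answer !== null && answer.trim().length > 0) {
        return { answer, source: url };
      }
    } catch {
      // A page that fails to load should not end the whole search.
      continue;
    }
  }
  // Report "not found" explicitly rather than leaving the agent to guess.
  return null;
}
```

Returning the source URL alongside the answer, and an explicit `null` when nothing is found, is my guess at how to give the top level agent less room to make something up.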

I’ll report back to this thread as I make progress… I feel like this is solvable but it’s all in how you break the task apart for the agent. It’s going to take a blend of code for the parts that code is good at (loops and such) and LLM magic for the part it’s good at: reasoning.