More bad documentation to report, this time about API vision (image input): https://platform.openai.com/docs/guides/images-vision?api-mode=chat

These requirements seem bizarrely out of touch. Not only are larger images allowed (they are simply resized by the API), but text and logos can certainly be sent (and if that rule is a usage-policy matter, put it in the legal terms instead).

I can immediately prove the size limit wrong, and so do the examples later on the same page, which refer to sending images such as 4096 x 8192.

I arrived at this while trying in earnest to build a pricing calculator that aligns with the documentation, which was only possible after a lot of experimentation and prior issue reports.

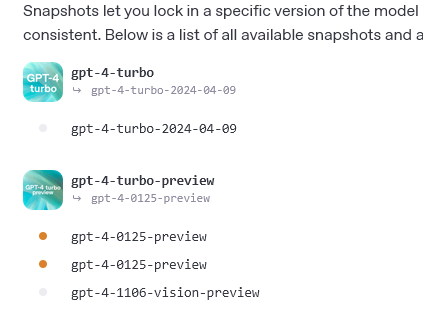

Tiles Models

Sending a 32,000 x 32 px image (alternating 32x32 black and white squares) to gpt-4.1:

The AI can still see the pattern of what would become a 2048 x 2 image if you follow the page’s resizing formula.

gpt-4.1 reports 779 input tokens via the API for the 32000 x 32 image, which aligns with the calculations.
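A minimal sketch of the tile arithmetic behind that 2048 x 2 figure, following the detail: high resize steps as the page describes them. The constants (85 base tokens plus 170 per 512 px tile) are assumed from the page’s gpt-4o-class figures, only downscaling is assumed (the page’s own examples never upscale), and `tiles_estimate` is a hypothetical helper name.

```python
import math

# Assumed per-image constants for a "tiles" model such as gpt-4.1 at detail: high.
# 85 base + 170 per 512 px tile are the gpt-4o-class figures; treat them as an assumption.
BASE_TOKENS = 85
TILE_TOKENS = 170
TILE = 512


def tiles_estimate(width: int, height: int) -> tuple[int, int, int, int]:
    """Return (resized_w, resized_h, tiles, image_tokens) under the tile resize steps."""
    # 1. Fit inside a 2048 x 2048 square, keeping aspect ratio (downscale only).
    scale = min(1.0, 2048 / max(width, height))
    # 2. Shrink so the shortest side is at most 768 px (never upscale).
    scale *= min(1.0, 768 / (min(width, height) * scale))
    # Round to whole pixels; keep at least 1 px so a thin sliver never vanishes.
    w = max(1, round(width * scale))
    h = max(1, round(height * scale))
    # 3. Count the 512 px tiles needed to cover the resized image.
    tiles = math.ceil(w / TILE) * math.ceil(h / TILE)
    return w, h, tiles, BASE_TOKENS + TILE_TOKENS * tiles


print(tiles_estimate(32000, 32))  # -> (2048, 2, 4, 765)
```

That leaves 14 of the 779 reported tokens, which would presumably be the text prompt and message overhead.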

Improper resize on tiled images, or really a 2000-pixel limit?

I’m going to send this image. It uses 8 tiles because its 513 px side is over the 512 px tile size.

Any resizing of the 2048 px side down to 2000 px would also shrink that smaller dimension, making the image one tile high instead of two, but that doesn’t happen:
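A sketch of the two possible outcomes, assuming the test image is 2048 x 513 as the text implies. `tile_count` and its `long_side_cap` parameter are hypothetical: the cap models the suspected 2000 px limit, and the 768 px shortest-side step is left out because 513 px is already below it. Token figures reuse the assumed 85 + 170-per-tile pricing.

```python
import math
from typing import Optional

TILE = 512  # tile edge in pixels


def tile_count(width: int, height: int, long_side_cap: Optional[int] = None) -> int:
    """Count 512 px tiles, optionally after a hypothetical long-side clamp."""
    if long_side_cap is not None and max(width, height) > long_side_cap:
        # Hypothetical clamp: scale the whole image so its long side equals the cap.
        scale = long_side_cap / max(width, height)
        width, height = round(width * scale), round(height * scale)
    return math.ceil(width / TILE) * math.ceil(height / TILE)


observed = tile_count(2048, 513)                     # no 2000 px clamp
clamped = tile_count(2048, 513, long_side_cap=2000)  # if a 2000 px limit applied
print(observed, clamped)                             # -> 8 4
print(85 + 170 * observed, 85 + 170 * clamped)       # -> 1445 765
```

Under the clamp, 513 px would become about 501 px, fitting in a single 512 px row, so the billing would halve to 4 tiles.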

Note that we had to live for two weeks with bad documentation that said gpt-4.1 was a “patches” model.

Patches Models

These are the models where each 32x32 px patch of a grid costs one token, and the image is resized until it fits within a maximum of 1536 tokens.
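The patch scheme can be sketched as below. The 32 px patch and the 1536 budget come from the description above; how the image is shrunk once it exceeds the budget is not documented, so the loop here (start from the area-based scale, then step down 1% at a time) is a stand-in assumption, not OpenAI’s method, and `patch_tokens` is a hypothetical name.

```python
import math

PATCH = 32     # patch edge in pixels; one patch = one token
BUDGET = 1536  # maximum patches per image


def patch_tokens(width: int, height: int) -> int:
    """Patches covering the image, shrunk until within budget (stand-in method)."""
    def count(w: float, h: float) -> int:
        # Partial patches at the edges still count as whole patches.
        return math.ceil(w / PATCH) * math.ceil(h / PATCH)

    if count(width, height) <= BUDGET:
        return count(width, height)
    # Start from the uniform scale whose area would equal exactly BUDGET patches...
    scale = math.sqrt(PATCH * PATCH * BUDGET / (width * height))
    # ...then shrink 1% at a time until the rounded-up patch grid fits the budget.
    while count(width * scale, height * scale) > BUDGET:
        scale *= 0.99
    return count(width * scale, height * scale)


print(patch_tokens(32, 32), patch_tokens(1024, 1024), patch_tokens(2048, 2048))
# -> 1 1024 1521
```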

gpt-4.1-mini, however, is dumbfounded by the same image that gpt-4.1 described correctly, producing a complete hallucination:

And neither the tokens billed nor any size restriction aligns with anything in the pricing calculation section OpenAI provides, which doesn’t actually give a formula or algorithm, just leaves you to guess.

With a one-word prompt and one 51200 x 32 image, the billing is similar:

Extrapolating back, it may be only “patches” models that have a 2000 px size limit placed on them, even though the model could take 1536 tokens of vision if the image were sent with different dimensions, instead of being capped at 63 tokens. If tiles models were similarly resized from 2048 down to 2000 px, that would break the later documentation on the same page.
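The 63-token figure can be reproduced by assuming a 2000 px long-side clamp: 2000 / 32 = 62.5, which rounds up to 63 patches for a strip one patch high. This sketch compares that against a budget-only approach for the 51200 x 32 image. Both branches are hypotheses rather than documented behavior, the budget-only scale is simplified for a strip one patch high, and `patches` is a hypothetical helper.

```python
import math

PATCH = 32     # pixels per patch side
BUDGET = 1536  # maximum patches per image


def patches(width: int, height: int, scale: float) -> int:
    """Patches covering the image after a uniform scale, rounded to whole pixels."""
    w = max(1, round(width * scale))
    h = max(1, round(height * scale))
    return math.ceil(w / PATCH) * math.ceil(h / PATCH)


width, height = 51200, 32

# Hypothesis A (suspected): a 2000 px long-side clamp is applied before patching.
clamped = patches(width, height, min(1.0, 2000 / max(width, height)))

# Hypothesis B (suggested alternative): no pixel clamp; shrink only as far as the
# patch budget requires. For a one-patch-high strip that is BUDGET * PATCH px wide.
budget_only = patches(width, height, min(1.0, BUDGET * PATCH / width))

print(clamped, budget_only)         # -> 63 1536
print(patches(2048, 32, 1.0))       # a 2048 px cap would give an even -> 64
```

Under hypothesis A, the last of the 63 patches is only half covered by image pixels, which is why a 2000 px limit wastes part of a patch.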

Conclusion

- The stated input size limit is wrong.

- Resizing for patches models is not documented.

- Tiles models are not even resized to the stated “limit”.

- 2000 pixels is a bad limit: it is 62.5 patches of 32 px. It could have been 2048 px, an even 64 patches. Or images could simply not be limited in dimension like that, letting the other algorithms keep whatever is sent under the 1536-token budget.

- The documentation needs to be rewritten, just like everything else noted here.

Appendix

gpt-4.1-mini — that boy just ain’t right

This shows it isn’t just a fluke of skinny images being resized to nothing: the gpt-4.1-mini model cannot even see the checkerboard that we can plainly see in the Prompts Playground preview.