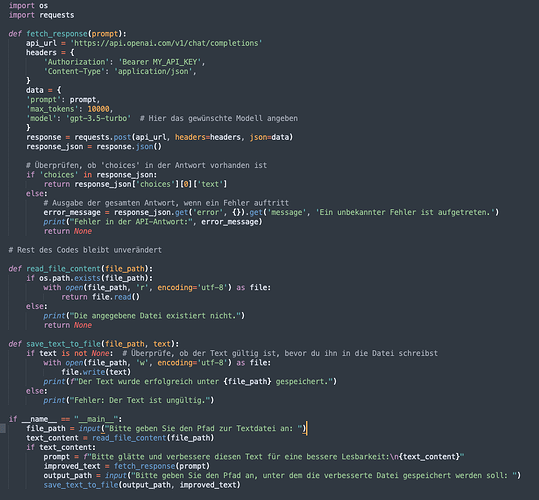

The problem is you are using an AI to write your code for you.

It doesn’t know how to use the API properly.

I've expanded on your code a bit to make sure `messages` is in the proper format, plus a few extras: reading the input file, saving the result, and some error handling.

```python
import os
from typing import Optional

import requests


def get_openai_response(user_message: str,
                        system_message: str = "You are an AI text processor.",
                        api_key: str = os.getenv('OPENAI_API_KEY', 'sk-12345-key'),
                        http_openai_endpoint: str = 'https://api.openai.com/v1/chat/completions',
                        model: str = "gpt-3.5-turbo",
                        top_p: float = 0.9,
                        max_tokens: int = 1234) -> Optional[str]:
    """
    This function sends a POST request to the OpenAI API and returns the response.

    Parameters:
    user_message (str): The message from the user.
    system_message (str, optional): The system message. Defaults to "You are an AI text processor.".
    api_key (str, optional): The API key. Defaults to the value of the environment variable 'OPENAI_API_KEY'
        (read once, when the module is loaded) or 'sk-12345-key' if the environment variable is not set.
    http_openai_endpoint (str, optional): The OpenAI API endpoint. Defaults to 'https://api.openai.com/v1/chat/completions'.
    model (str, optional): The model to use. Defaults to "gpt-3.5-turbo".
    top_p (float, optional): The top_p value. Defaults to 0.9.
    max_tokens (int, optional): The maximum number of tokens in the response. Defaults to 1234.

    Returns:
    str or None: The text of the AI's reply, or None if the request failed.
    """
    # Prepare the body of the HTTP POST request that will be sent by the requests module
    http_body_dictionary = {
        "model": model,  # This is the AI model compatible with the Chat Completions endpoint
        "top_p": top_p,  # Nucleus sampling: range 0.0-1.0; lower values cut off more unlikely tokens
        "max_tokens": max_tokens,  # This sets the maximum length of the response the API will return
        "messages": [  # This is the list-of-messages format that Chat Completions requires
            {
                "role": "system",  # A system message gives the AI its identity and purpose
                "content": [
                    {
                        "type": "text",
                        "text": system_message
                    },
                ]
            },
            {
                "role": "user",  # A user message provides a task or query
                "content": [
                    {
                        "type": "text",  # this format prepares you for sending images also
                        "text": user_message
                    },
                ]
            }
        ],
    }

    # Prepare the headers of the HTTP request that will be sent by the requests module
    http_headers_dictionary = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json"
    }

    try:
        # Send the HTTP request and get the response, in an error-catching container
        response = requests.post(
            http_openai_endpoint,
            headers=http_headers_dictionary,
            json=http_body_dictionary,
            timeout=120  # seconds; without a timeout a stalled connection can hang forever
        )
        # Raise an HTTPError for 4xx/5xx status codes; otherwise return the reply text
        response.raise_for_status()
        return response.json()["choices"][0]["message"]["content"]
    except requests.exceptions.HTTPError as http_err:
        # The response body usually says exactly what was wrong with the request
        print(f"HTTP error occurred: {http_err}\n{http_err.response.text}")
    except Exception as your_screwup:
        print(f"An error occurred because of {your_screwup}")
    return None  # reached only when one of the errors above was caught


def read_file_content(full_file_path: str) -> Optional[str]:
    """
    This function reads the content of a file.

    Parameters:
    full_file_path (str): The path to the file.

    Returns:
    str or None: The content of the file, or None if it could not be read.
    """
    try:
        # UTF-8 so that non-ASCII text (e.g. German umlauts) reads the same on every OS
        with open(full_file_path, 'r', encoding='utf-8') as file:
            return file.read()
    except FileNotFoundError:
        print(f"The file {full_file_path} does not exist.")
    except OSError:
        print(f"There was an error reading the file {full_file_path}.")
    return None


def save_text_to_file(full_file_path: str, ai_output_text: str, file_suffix: str = "-processed.txt"):
    """
    This function writes text to a file.

    Parameters:
    full_file_path (str): The path to the original file.
    ai_output_text (str): The text to write to the file.
    file_suffix (str, optional): The suffix to append to the file name. Defaults to "-processed.txt".
    """
    output_file_path = full_file_path + file_suffix
    try:
        with open(output_file_path, 'w', encoding='utf-8') as file:
            file.write(ai_output_text)
        print(f"Text successfully saved to {output_file_path}")
    except OSError:
        print(f"There was an error writing to the file {output_file_path}")


if __name__ == "__main__":
    filename = input("AI text formatter. Input your file name to be processed:\n>>>")
    try:
        text_content = read_file_content(filename)
        if text_content is not None:
            user_message = ("Improve the structure of this text, formatting into paragraphs:\n" +
                            text_content)
            response_text = get_openai_response(user_message)
            if response_text is not None:  # None means the API call failed and the error was already printed
                print(f"got {response_text[:60]}...saving")  # preview of text start
                save_text_to_file(filename, response_text)
    except Exception as e:
        print(f"An error occurred: {e}")
```
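The comment on the user message says the list-of-parts format prepares you for sending images too. Here is a minimal sketch of what an image part could look like, assuming a local PNG file sent as a base64 data URL and a vision-capable model (gpt-3.5-turbo does not accept images). The helper names `image_content_part` and `user_message_with_image` are hypothetical, not part of any library:

```python
import base64
from pathlib import Path


def image_content_part(image_path: str, mime_type: str = "image/png") -> dict:
    """Build a content part that carries a local image as a base64 data URL."""
    encoded = base64.b64encode(Path(image_path).read_bytes()).decode("ascii")
    return {
        "type": "image_url",
        "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
    }


def user_message_with_image(text: str, image_path: str) -> dict:
    """A user message whose content list holds a text part followed by an image part."""
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": text},
            image_content_part(image_path),
        ],
    }
```

You would put the returned dict in place of the user message in `messages` and switch `model` to one that accepts images, such as `gpt-4o`.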

Since I don't speak German and can't judge whether an AI translation would be accurate, I'll leave it to you to translate the prompts and messages into German.

The `openai` library may be a bit easier to use, but calling the REST endpoint directly with `requests`, as you do, means your code won't break on the whims of the `openai` package maintainers on PyPI.
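For comparison, here is roughly what the same call looks like with the `openai` package. This is a sketch assuming version 1.x of the library (the `OpenAI` client class) with the key in the `OPENAI_API_KEY` environment variable; the function name `get_openai_response_sdk` is hypothetical:

```python
from typing import Optional


def get_openai_response_sdk(user_message: str,
                            system_message: str = "You are an AI text processor.",
                            model: str = "gpt-3.5-turbo",
                            top_p: float = 0.9,
                            max_tokens: int = 1234) -> Optional[str]:
    # Imported inside the function so this module still loads where openai isn't installed
    from openai import OpenAI

    # OpenAI() reads the API key from the OPENAI_API_KEY environment variable by default
    client = OpenAI()
    completion = client.chat.completions.create(
        model=model,
        top_p=top_p,
        max_tokens=max_tokens,
        messages=[
            {"role": "system", "content": system_message},  # plain-string content also works for text
            {"role": "user", "content": user_message},
        ],
    )
    # Same path into the result as the requests version: choices[0].message.content
    return completion.choices[0].message.content
```

It is shorter, but its call signature follows whatever the installed `openai` version expects, which is the trade-off described above.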