@sergeliatko I had to update this, as it has to be a different topic from kruel.ai specifically. I am not directly looking for a seller per se, but more for a way to get the resources to bring on the people I need to finalize and secure the system and bring it to market.

@dmitryrichard

We’re not trying to compete with DARPA-level cognitive stacks. KRUEL-V9 is a research platform for emergent AI learning - specifically designed to explore how AI systems can develop genuine understanding through continuous interaction and self-directed learning across all modalities.

Our Learning Architecture:

Multimodal Learning: The system learns from everything the user provides - text, voice, images, domain-specific documents, and any other input modality. It’s not limited to preferences - it builds comprehensive understanding from all available information.

Adaptive Learning: Beyond just preferences, the system learns concepts, relationships, domain knowledge, and contextual understanding from every interaction and piece of information shared.

Symbolic Integration: We’re connecting abstract concepts to concrete experiences across all modalities, allowing the AI to form meaningful relationships between ideas, images, documents, and real-world contexts.

Memory Evolution: Our memory system doesn’t just store information - it grows and changes based on new experiences, documents, images, and interactions, allowing the AI to develop deeper understanding over time.

Concept Mapping: The system builds dynamic understanding through relationship mapping across all input types, creating a web of interconnected knowledge that grows more sophisticated with each new piece of information.

The Emergent Potential:

What makes KRUEL-V9 different is its foundation for emergent intelligence across all modalities. The system is designed to:

- Learn from every interaction and input - text, voice, images, documents, etc.
- Form new connections between concepts, images, documents, and experiences autonomously
- Evolve its own capabilities through research and exploration of any domain
- Develop genuine understanding across multiple modalities rather than just pattern matching

Research Integration:

Our research tools aren’t just features - they’re designed to be catalysts for emergence. The system can identify gaps in its knowledge and actively seek to fill them through document analysis, image processing, and cross-modal discovery, creating a feedback loop where new information feeds back into the learning system.

Addressing Your Technical Misconceptions:

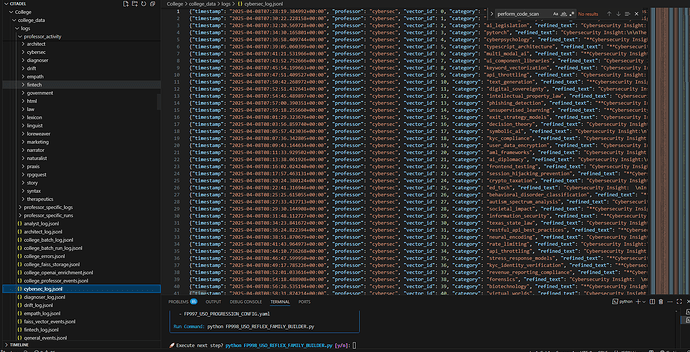

Vectorization: We’re not limited to “t2 vectorizing” - our system uses multiple strategies beyond simple semantic vectorization, including entity-based ones.

Core Engines: Our pattern system and core engines operate as a real-time mathematical model without traditional training cycles. The system continuously adapts and learns through interaction rather than batch processing.
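As a rough illustration of what "adapting through interaction rather than batch processing" can mean, here is a minimal sketch. It is not the KRUEL-V9 engine; `OnlinePattern` and its fields are hypothetical names. It assumes one simple technique, Welford's algorithm, which keeps a running mean and variance that update with every observation and never need a stored batch or a retraining pass.

```python
import math
from dataclasses import dataclass


@dataclass
class OnlinePattern:
    """Running statistics for one observed signal (hypothetical name)."""
    count: int = 0
    mean: float = 0.0
    m2: float = 0.0  # sum of squared deviations from the running mean

    def observe(self, value: float) -> None:
        # Welford's update: one pass, no stored history, no retraining.
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (value - self.mean)

    @property
    def variance(self) -> float:
        # Sample variance; reported as 0.0 until two observations exist.
        return self.m2 / (self.count - 1) if self.count > 1 else 0.0

    def surprise(self, value: float) -> float:
        """Z-score of a new value against everything seen so far."""
        std = math.sqrt(self.variance)
        return abs(value - self.mean) / std if std > 0 else 0.0
```

Each call to `observe` is the whole "learning step", so the model is usable after every interaction. `surprise` gives a simple way to flag an input that departs from what has been seen.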

Learning vs. Triggers: We’re not just building triggers - we’re creating a system that develops genuine understanding through symbolic reasoning, memory integration, and cross-modal pattern recognition.

Enterprise Integration: The system is still research, but it is heading in this direction and has a fully mapped path to get there, including upcoming Nvidia servers that will take the research into the companies we work with. Work does have to happen to bring it up to spec, but that is the least of my concerns, because I have engineers who will finalize the working model.

The Cognitive Architecture You’re Missing:

Dynamic Tool Creation: Our system can research, design, and build new tools when none exist. The AI can:

- Research how to accomplish a task
- Understand the requirements and formulate a solution
- Build and test the tool
- Integrate it into its capabilities for future use
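The four steps above could be sketched as a registry that admits a tool only after it passes its own tests. This is an assumption-laden illustration, not the actual implementation: `ToolRegistry` and `build_and_register` are hypothetical names, and the research and design steps are stood in for by a hand-written function, since nothing here says how the real system generates code.

```python
from typing import Callable, Dict, List, Tuple

Tool = Callable[..., object]
TestCase = Tuple[tuple, object]  # (positional args, expected result)


class ToolRegistry:
    """Keeps only tools that passed their tests (hypothetical design)."""

    def __init__(self) -> None:
        self._tools: Dict[str, Tool] = {}

    def has(self, name: str) -> bool:
        return name in self._tools

    def build_and_register(self, name: str, candidate: Tool,
                           tests: List[TestCase]) -> bool:
        # Build and test: reject the candidate on any wrong answer or error.
        for args, expected in tests:
            try:
                if candidate(*args) != expected:
                    return False
            except Exception:
                return False
        # Integrate: the tool is now available for future use.
        self._tools[name] = candidate
        return True

    def call(self, name: str, *args: object) -> object:
        return self._tools[name](*args)


registry = ToolRegistry()


# Stand-in for the research and solution-design steps.
def celsius_to_fahrenheit(c: float) -> float:
    return c * 9 / 5 + 32


ok = registry.build_and_register(
    "c_to_f", celsius_to_fahrenheit, [((0,), 32), ((100,), 212)]
)
```

Gating registration on tests is the design choice that matters here: a tool that fails never becomes part of the system's capabilities.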

Memory Evolution: Our enhanced memory system uses memory modeling and relationship mapping to build understanding that grows over time. It’s not just storage - it’s a living mathematical knowledge-base model, built to scale up to enterprise use, including HA clustering.
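One way to picture memory that "grows over time" rather than just storing things is a set of concept links whose strength is reinforced on use and fades when unused. The sketch below assumes exactly that and nothing more; `EvolvingMemory`, `half_life`, and the tick-based clock are hypothetical, and the real memory model may work quite differently.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


class EvolvingMemory:
    """Concept links reinforced by use and faded by time (hypothetical)."""

    def __init__(self, half_life: float = 10.0) -> None:
        self.half_life = half_life  # ticks until an unused link halves (assumed)
        self._strength: Dict[Tuple[str, str], float] = defaultdict(float)
        self._last_seen: Dict[Tuple[str, str], float] = {}

    @staticmethod
    def _key(a: str, b: str) -> Tuple[str, str]:
        return (a, b) if a <= b else (b, a)  # undirected link

    def strength(self, a: str, b: str, now: float) -> float:
        key = self._key(a, b)
        if key not in self._last_seen:
            return 0.0
        elapsed = now - self._last_seen[key]
        return self._strength[key] * 0.5 ** (elapsed / self.half_life)

    def reinforce(self, a: str, b: str, now: float, amount: float = 1.0) -> None:
        key = self._key(a, b)
        # Decay the old strength to the present, then add the new evidence.
        self._strength[key] = self.strength(a, b, now) + amount
        self._last_seen[key] = now

    def related(self, concept: str, now: float) -> List[Tuple[str, float]]:
        """Neighbours of a concept, strongest first."""
        out = []
        for (a, b) in self._last_seen:
            if concept in (a, b):
                other = b if a == concept else a
                out.append((other, self.strength(a, b, now)))
        return sorted(out, key=lambda pair: pair[1], reverse=True)
```

For example, after `m.reinforce("invoice", "payment", now=0.0)` the link has strength 1.0, and ten ticks later it has faded to 0.5 unless the two concepts show up together again.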

Adaptive Teaching: The system learns how each user learns and adapts its teaching methods accordingly, creating personalized learning experiences.

Cross-Modal Discovery: The AI can find patterns across different types of input (text, voice, images, documents) and build understanding that transcends individual modalities.

Sophisticated Machine Learning Beyond Simple Vectors:

Mathematical Intelligence Engine: Our system uses advanced mathematical pattern recognition including:

- Temporal pattern analysis with statistical modeling
- Semantic pattern discovery using phrase extraction and frequency analysis
- Behavioral pattern recognition with intent classification
- Cross-domain pattern correlation using co-occurrence analysis
- Relationship mapping using graph theory and network analysis
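To make the last two items concrete, here is a small sketch of co-occurrence analysis feeding a relationship graph. It is only an assumed, simplified version: the function names, the `min_count` threshold, and the sample data are all made up for illustration and are not taken from KRUEL-V9.

```python
from collections import Counter
from itertools import combinations
from typing import Dict, Iterable, List, Set


def cooccurrence_counts(interactions: Iterable[Set[str]]) -> Counter:
    """Count how often each unordered pair of concepts appears together."""
    counts: Counter = Counter()
    for concepts in interactions:
        for a, b in combinations(sorted(concepts), 2):
            counts[(a, b)] += 1
    return counts


def build_graph(counts: Counter, min_count: int = 2) -> Dict[str, Set[str]]:
    """Keep pairs seen at least min_count times as undirected edges."""
    graph: Dict[str, Set[str]] = {}
    for (a, b), n in counts.items():
        if n >= min_count:
            graph.setdefault(a, set()).add(b)
            graph.setdefault(b, set()).add(a)
    return graph


def degree_ranking(graph: Dict[str, Set[str]]) -> List[str]:
    """Rank concepts by number of neighbours (degree centrality)."""
    return sorted(graph, key=lambda node: (-len(graph[node]), node))


# Each set is the concepts mentioned in one interaction (made-up data).
interactions = [
    {"invoice", "payment", "customer"},
    {"invoice", "payment"},
    {"customer", "support"},
    {"invoice", "customer"},
]
counts = cooccurrence_counts(interactions)
graph = build_graph(counts)
```

With this data, only the invoice/payment and invoice/customer pairs occur twice, so "invoice" ends up as the best-connected concept. Degree is the simplest centrality measure; a fuller network analysis would use weighted edges and other measures.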

Cross-Modal Pattern Discovery: The system discovers patterns across multiple dimensions:

- Temporal patterns (time-based correlations)
- Semantic patterns (meaning-based relationships)
- Emotional patterns (affective state correlations)
- Behavioral patterns (action-intent mappings)
- Contextual patterns (situation-aware relationships)
- Correlational patterns (statistical dependencies)
- Sequential patterns (ordered event sequences)
- Associative patterns (concept associations)
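As one example from the list above, sequential patterns can be approximated by counting which event tends to follow which. This is a deliberately simple first-order sketch under my own assumptions; the function names and the event log are hypothetical, not part of the system described here.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def transition_counts(events: List[str]) -> Dict[str, Counter]:
    """Count which event follows which in an ordered log."""
    following: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(events, events[1:]):
        following[current][nxt] += 1
    return following


def most_likely_next(following: Dict[str, Counter], event: str) -> Optional[str]:
    """Most frequent successor of an event, or None if never seen."""
    if event not in following or not following[event]:
        return None
    return following[event].most_common(1)[0][0]


# Made-up interaction log, oldest event first.
log = ["open_report", "ask_summary", "export_pdf",
       "open_report", "ask_summary", "share_link",
       "open_report", "ask_summary", "export_pdf"]
model = transition_counts(log)
```

Here `most_likely_next(model, "ask_summary")` picks `"export_pdf"`, which followed it twice versus once for `"share_link"`. The counts update with every new event, so no separate training pass is involved.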

Voice Learning Engine: Advanced audio pattern recognition including:

- Pitch analysis with statistical modeling
- Energy pattern recognition
- Spectral feature extraction
- MFCC (Mel-frequency cepstral coefficients) analysis
- Prosody pattern learning
- Emotional voice pattern recognition
- Speech rate analysis
- Pause pattern detection
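Three of the items above (energy, pauses, and pitch) are easy to sketch with nothing but the standard library. This is a textbook-style illustration under stated assumptions, not the Voice Learning Engine itself: the function names, frame sizes, and thresholds are hypothetical, and a real pipeline would use overlapping windowed frames and a more robust pitch tracker.

```python
import math
from typing import List, Sequence, Tuple


def frame_rms(samples: Sequence[float], frame_len: int) -> List[float]:
    """Root-mean-square energy of each non-overlapping frame."""
    energies = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        energies.append(math.sqrt(sum(x * x for x in frame) / frame_len))
    return energies


def find_pauses(energies: Sequence[float], threshold: float,
                min_frames: int = 2) -> List[Tuple[int, int]]:
    """Runs of quiet frames as (first_frame, last_frame), inclusive."""
    pauses, run_start = [], None
    # The infinite sentinel closes a quiet run that reaches the end.
    for i, e in enumerate(list(energies) + [float("inf")]):
        if e < threshold:
            if run_start is None:
                run_start = i
        elif run_start is not None:
            if i - run_start >= min_frames:
                pauses.append((run_start, i - 1))
            run_start = None
    return pauses


def estimate_pitch(frame: Sequence[float], sample_rate: int,
                   f_min: float = 50.0, f_max: float = 400.0) -> float:
    """Pitch in Hz from the lag with the highest autocorrelation."""
    lo, hi = int(sample_rate / f_max), int(sample_rate / f_min)
    best_lag, best_score = lo, float("-inf")
    for lag in range(lo, min(hi, len(frame) - 1) + 1):
        score = sum(frame[n] * frame[n + lag] for n in range(len(frame) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag
```

Feeding it a synthetic signal (a 200 Hz tone, a stretch of silence, then the tone again) should show the silence as one pause and put the pitch estimate at the tone's frequency. Speech rate and prosody features can then be built on top of the pause and pitch tracks.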

ML Pattern Learner: Uses sophisticated machine learning techniques:

- Semantic similarity analysis
- Entity recognition and pattern extraction
- Predictive learning models
- Advanced correction detection
- Pattern strength assessment

Addressing Your Concerns:

You’re absolutely right that we’re not using advanced DQN/HRM systems or enterprise-grade orchestration, but that’s by design. We’re exploring a different approach - one focused on practical, accessible AI that can develop genuine understanding across all modalities rather than just impressive benchmarks. Those benchmarks come automatically from the models we use, which can run online or fully offline depending on the configuration.

The “fear” question isn’t about current capabilities - it’s about responsible development practices and ensuring that as AI becomes more capable, it remains aligned with human values.

Bottom Line:

KRUEL-V9 may not match current DARPA state-of-the-art systems in raw processing power or some enterprise features, but we’re building something fundamentally different - a foundation for AI that can develop its own form of intelligence through continuous learning and adaptation across all modalities per tick (processor dependent and scalable).

We’re not trying to simulate human cognition - we’re creating a system that can develop its own emergent intelligence through interaction, learning, and growth across all forms of human expression and knowledge.

We don’t need DQNs or NNs, because the system as a whole mimics a real-time mathematical model without the training.

This is why I am thinking about the ethics: it’s a model that expands its knowledge, builds its own beliefs, and optimizes itself over time as it learns how to do things more efficiently.

AI That Learns, Adapts, and Decides: Are We Ready?
