Hi, so basically I write articles. Today I gathered comments from several forums related to my topic and pasted them into ChatGPT. To my surprise, it only considered about half of the text; the total word count was around 15,000 words. If it really has a 128K context window, why did it do that? I also asked it for its maximum token limit, and you can see its answer in the attached screenshot. I asked the same question of the API model (I used the API through chatbotui com) and the answer was the same. So how can OpenAI claim this? I am not relying only on that answer: as I said, I ran into the limit in practice by providing a long context.

That’s ChatGPT. Any model through ChatGPT is fairly nerfed compared to using it via the API. That’s why the API is so much more expensive than $20 a month.

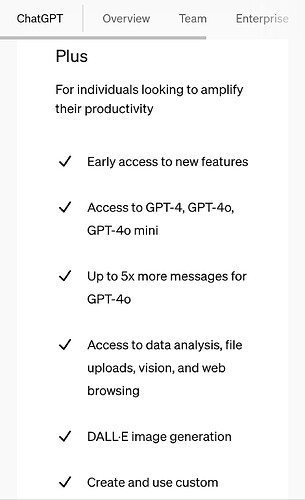

The context window for each model in ChatGPT varies depending on the plan, as stated on the Pricing page:

https://openai.com/chatgpt/pricing/

The Free plan offers 8K, the Plus and Team plans offer 32K, and the Enterprise plan offers 128K.
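To see roughly how a 15,000-word paste compares with those limits, here is a minimal Python sketch. It assumes OpenAI's general rule of thumb that one token is about 3/4 of an English word, and it treats "8K", "32K" and "128K" as 8,000, 32,000 and 128,000 tokens. Both are approximations: the real count depends on the tokenizer and the language of the text. The function names are hypothetical, for illustration only.

```python
# Rough rule of thumb (assumption): 1 token is about 0.75 English words.
# Actual counts depend on the tokenizer and the text, so this is an estimate.
WORDS_PER_TOKEN = 0.75

# Context window per ChatGPT plan, as quoted in this thread.
# "K" is treated as 1,000 here for simplicity (assumption).
PLAN_CONTEXT_TOKENS = {
    "Free": 8_000,
    "Plus/Team": 32_000,
    "Enterprise": 128_000,
}


def estimate_tokens(word_count: int) -> int:
    """Approximate token count for English text with the given word count."""
    return round(word_count / WORDS_PER_TOKEN)


def fits_per_plan(word_count: int) -> dict[str, bool]:
    """Report whether the estimated token count fits each plan's window.

    This ignores the system prompt, earlier messages and the model's reply,
    which all share the same window, so True here is an optimistic result.
    """
    tokens = estimate_tokens(word_count)
    return {plan: tokens <= limit for plan, limit in PLAN_CONTEXT_TOKENS.items()}


if __name__ == "__main__":
    words = 15_000  # word count reported in the original question
    print(f"~{estimate_tokens(words)} tokens")
    for plan, fits in fits_per_plan(words).items():
        print(f"{plan}: {'fits' if fits else 'too long'}")
```

By this estimate, 15,000 words is about 20,000 tokens, well over an 8K window. On a 32K window it may look like it fits, but the margin is smaller than it seems because the conversation history and the reply use the same window, and pasted forum text can tokenize to more tokens than the rule of thumb suggests.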

@dignity_for_all

I can’t find the context window size specification on the pricing page.

This would be quite useful to know for sure.

Can you give me a pointer where to find it?

Here is a screenshot of what I see:

Here is the screenshot regarding the context window. It might be difficult to see clearly if you’re viewing from a mobile device.

What about the API? Even the GPT-4o API doesn't seem to have a 128K context window.

I understand.

In my opinion, model specifications for LLMs are not properly communicated across the entire market, which is especially problematic for consumer products.

However, the API is a developer service. You need to share your code, the error message, etc., for us to support you effectively and efficiently.