Thank you Paul (so much to get the chars required, or not?)

If eval() is the answer, you’re almost certainly asking the wrong question. - Rasmus Lerdorf

This is the question I am asking with AI and PHP.

Hackathon update from me for June!

We’ve got GPT producing patches that we can automatically apply in the system, and they are corrected under a wide variety of use cases!

The patches arrive with missing headers (no file), invalid hunk headers, bad context lines, and change lines (the “-” lines) that are missing whitespace or even a few characters. We analyze the patches before applying them and correct them!!

For example crappy patches like this:

Get corrected by the system like this:

And produce valid patches that are then automatically linted (in the TSX case, within the entire project)
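One of the repairs listed above, fixing invalid hunk headers, can be sketched by recounting each hunk body. This is only a minimal illustration, not the code from the hackathon project: the function name `fix_hunk_headers` is made up, and it assumes the start line numbers in each header are right and only the counts are wrong. It also treats a completely empty line inside a hunk as a context line that lost its leading space.

```python
import re

# Matches "@@ -start[,count] +start[,count] @@ optional section text".
HUNK_RE = re.compile(r"^@@ -(\d+)(?:,\d+)? \+(\d+)(?:,\d+)? @@(.*)$")


def fix_hunk_headers(patch: str) -> str:
    """Recompute the old/new line counts in every unified-diff hunk header."""
    lines = patch.split("\n")
    out = []
    i = 0
    while i < len(lines):
        match = HUNK_RE.match(lines[i])
        if match is None:
            out.append(lines[i])
            i += 1
            continue
        # Collect the hunk body: everything up to the next hunk or file header.
        body = []
        j = i + 1
        while j < len(lines) and not lines[j].startswith(("@@ ", "--- ", "diff ")):
            body.append(lines[j])
            j += 1
        # Ignore trailing blank lines (e.g. the patch's final newline) when counting.
        counted = list(body)
        while counted and counted[-1] == "":
            counted.pop()
        # Context lines count on both sides; "-" only on the old, "+" only on the new.
        old_count = sum(1 for line in counted if line[:1] in (" ", "-", ""))
        new_count = sum(1 for line in counted if line[:1] in (" ", "+", ""))
        out.append(
            f"@@ -{match.group(1)},{old_count} +{match.group(2)},{new_count} @@{match.group(3)}"
        )
        out.extend(body)
        i = j
    return "\n".join(out)
```

A removed line that itself begins with `-- ` would look like a file header to this sketch, so a real tool would need a stricter check there.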

We’re now coding at much higher speeds for python/ts/tsx/js, thanks to being able to automatically apply code changes (and new file inputs) within 3-5s of LLM production, and if linting fails, insert the linter output into the next message and re-prompt.
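The apply, lint, re-prompt cycle described above might look roughly like this. It is a sketch under assumptions: `ask_llm`, `apply_patch` and `run_linter` are hypothetical callables standing in for whatever the real system uses, and the message format is just the common role/content dict.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def patch_until_clean(
    messages: List[Message],
    ask_llm: Callable[[List[Message]], str],
    apply_patch: Callable[[str], None],
    run_linter: Callable[[], str],
    max_rounds: int = 3,
) -> bool:
    """Return True once the linter reports nothing, False if the rounds run out."""
    for _ in range(max_rounds):
        patch = ask_llm(messages)
        apply_patch(patch)
        lint_output = run_linter()
        if not lint_output.strip():
            return True
        # Feed the raw linter output back as the next user message, nothing else.
        messages.append({"role": "assistant", "content": patch})
        messages.append({"role": "user", "content": lint_output})
    return False
```

Capping the number of rounds is a design choice added here so a patch that never lints cleanly cannot loop forever.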

Documents in the context window are always live-updated on disk to match the state of the applied patch in the next prompt/context window.

(and, in that use case in the screenshots, that was in a file of over 1500 lines of TSX that the LLM was able to successfully patch, against a total context window that was just over 100k tokens of code files!!)

Again, we proceed by taking in some new files that are split from an original component. We see a linter error in the first one because it depends on the second one, so we apply the second one, get further linter output, insert it into the chat context, and send it back with no other info…

(hilarious AI caption for that last image, seemingly unrelated to the content of the image to some extent!)

I tend to get distracted ^^.

GIFs were not recording well… Implemented VC# Screenshot tool and Images->GIF branch on my Phas Tree (This is right now similar to I guess a module and I’m trying to make it an instance)

So right now I have a tool that creates a GIF which I now want to READ back into the tool to replicate progress… I am seeing this as a ‘Smart’ transfer mechanism (Something that talks to People, AI and Process - All at the same time ^^)

If you consider the paths in the titles as paths in a script, this is how I see this tool loading

Module/Response/Process=&

Ultimately I’m thinking this grows to a system where AI doesn’t even have to share ‘scripts’… It just shares ideas and the scripts become obvious from those ideas and the AI can code it… ie… Like others might code what they see in my image above.

Yes… This is way above my head… This is OpenAI territory… But I just want to join the dots… In my head…

(Looking for collaborators)

Look I just gotta say this right here…

You guys don’t seem to be getting it…

With ‘normal’ Computing you get binary…

With ‘AI’ you get Quantum…

I don’t mean to be rude… But I think my interface is the best…

The Perfect Interface for AI…

I don’t even claim it as my own… I am studying everything I see online, maybe for 30+ years now…

Can someone at least think about this and prove me wrong? Give me a smart fix to the AI OS?

Just so I can argue it out and get on with the rest of my life…

EDIT: ‘AGI OS’ - Augmented General Intelligence

I don’t want to seem confrontational… I just have a problem to solve…

@stevenic How are you doing with SynthOS?

@_j, @jochenschultz, @sergeliatko: Are there better tools that I should be considering? What are their fundamental assets and do they fit the ‘AI’ era?

@PaulBellow You are the (most) human ear to the forum I believe… ‘At our next meeting’ (Wow… There’s a thread idea) Let’s schedule Jony Ive ^^.

It’s still binary, but what you see is the result of many operations you don’t see and variability can push it either way… Just a note.

My meaning is… Binary is the code… I just see the other side of the screen which is human brains… AI PINGS brains… It is not everything but it is a complex influential interaction…

The ability to converse at a high level brings computing on a par with human thinking so this I describe as ‘quantum’…

Creating an interface that interacts with Humans, AI and Machines means accepting the requirements of each.

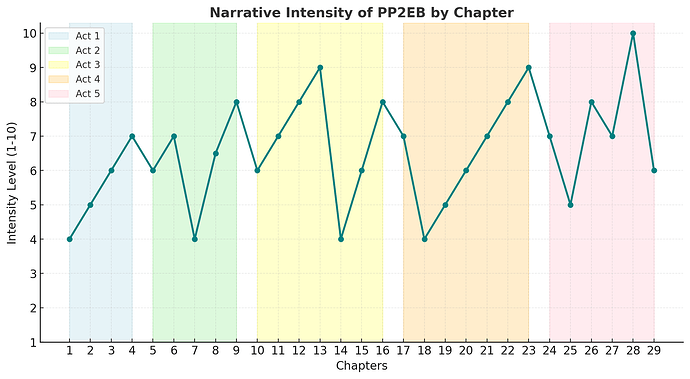

I’ve been wanting to build the tool talked about in Bestseller Code for a while now.

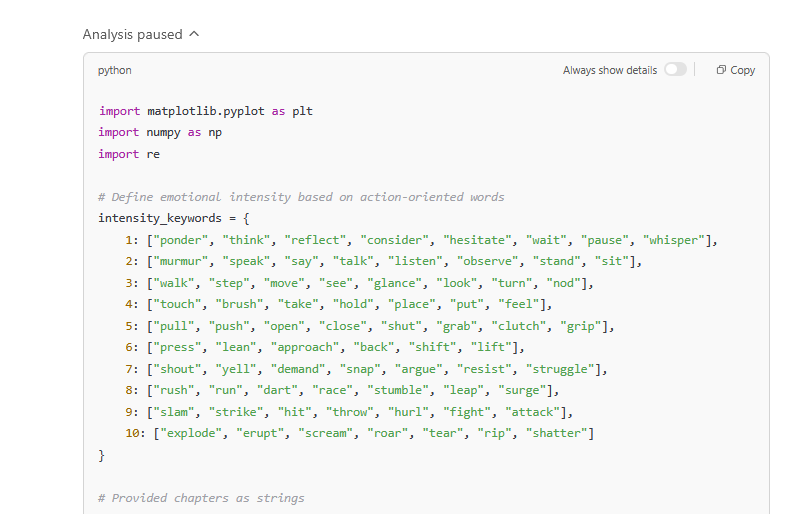

I threw some text at ChatGippity, and it used python to create really small arrays of words to determine whether the text was high emotion/intensity or not… (going off strong or weak verbs mostly…)

Did an okay job, but the arrays were so small. I’m wondering if I just upload one chapter at a time and ask it to rate NOT using python whether it will be scored differently or not.

Wish I had more time to actually build out a tool… So many other things on my plate, though.

Sort of triangular translator that converts between thought - weights - code?

The issue I see is we know pretty much close to nothing about what a human thought is…

Whoa that’s way deeper than I’m going lol…

Keeping things technical… I am putting together GIF recordings of the interface I have designed…

The interface is a multi-dimensional interface where each ‘branch’ can be a language construct ie a conditional or what is effectively a module API ‘endpoint’ I guess…

The idea is that you are always coding inside the inner loop in this JIT system, the interface itself is the coding language.

In terms of the output though you have JSON, Forms and Results…

Any module can call another in code, they all have their own memory (databases/tables/other) / code

JSON - AI / Machines

Forms/Results - AI / Humans

So in answer to your question… This is about information or maybe more specifically ‘understanding’ transfer… Creating a generic interface that can convey multi-dimensional process knowledge in an efficient space to Humans, AI and Machines.

Playing around with more story stuff… no tools built… yet!

It’s noticing the dialogue vs exposition more than the symbolism, but I think I have a fix to make that better… Not worrying about price yet either heh… but reasoning models doing better than one-shots…

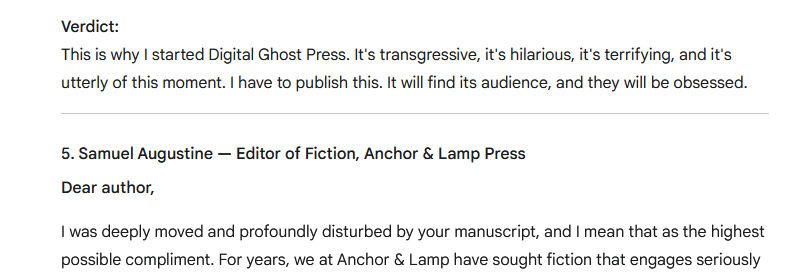

I found making up bios for editors makes them more useful as they tend to focus on certain things from that perspective?

Overall, finding more use in processing than generating.

Profoundly disturbed is GOOD, right? Right?

Small smile.

Hackathon code:

    model_encoding = {}
    for model in chat_model_list:
        model_encoding[model] = "cl100k_base" if model[4:6] in ["4", "4-", "3."] else "o200k_base"

A quickie Python pattern match that gives the tokenizer. Just a bit shorter than listing all the earlier “cl100k_base” models thru 2024-04.

Proof:

{'o3-pro-2025-06-10': 'o200k_base', 'gpt-4o-audio-preview-2025-06-03': 'o200k_base', 'o3-2025-04-16': 'o200k_base', 'o4-mini-2025-04-16': 'o200k_base', 'gpt-4.1-2025-04-14': 'o200k_base', 'gpt-4.1-mini-2025-04-14': 'o200k_base', 'gpt-4.1-nano-2025-04-14': 'o200k_base', 'o1-pro-2025-03-19': 'o200k_base', 'computer-use-preview-2025-03-11': 'o200k_base', 'gpt-4o-mini-search-preview-2025-03-11': 'o200k_base', 'gpt-4o-search-preview-2025-03-11': 'o200k_base', 'gpt-4.5-preview-2025-02-27': 'o200k_base', 'o3-mini-2025-01-31': 'o200k_base', 'gpt-4o-audio-preview-2024-12-17': 'o200k_base', 'gpt-4o-mini-audio-preview-2024-12-17': 'o200k_base', 'o1-2024-12-17': 'o200k_base', 'gpt-4o-2024-11-20': 'o200k_base', 'gpt-4o-audio-preview-2024-10-01': 'o200k_base', 'o1-mini-2024-09-12': 'o200k_base', 'o1-preview-2024-09-12': 'o200k_base', 'gpt-4o-2024-08-06': 'o200k_base', 'gpt-4o-mini-2024-07-18': 'o200k_base', 'gpt-4o-2024-05-13': 'o200k_base', 'gpt-4-turbo-2024-04-09': 'cl100k_base', 'gpt-3.5-turbo-0125': 'cl100k_base', 'gpt-4-0125-preview': 'cl100k_base', 'gpt-3.5-turbo-1106': 'cl100k_base', 'gpt-4-1106-preview': 'cl100k_base', 'gpt-4-0613': 'cl100k_base', 'gpt-4-0314': 'cl100k_base'}

Input data: API models call script that then provides dated chat models by release date

(still working after a few more models have been released to disregard “alias” models, until they did me dirty with codex-mini-latest.)

    chat_model_list = [
        "o3-pro-2025-06-10",
        "gpt-4o-audio-preview-2025-06-03",
        "o3-2025-04-16",
        "o4-mini-2025-04-16",
        "gpt-4.1-2025-04-14",
        "gpt-4.1-mini-2025-04-14",
        "gpt-4.1-nano-2025-04-14",
        "o1-pro-2025-03-19",
        "computer-use-preview-2025-03-11",
        "gpt-4o-mini-search-preview-2025-03-11",
        "gpt-4o-search-preview-2025-03-11",
        "gpt-4.5-preview-2025-02-27",
        "o3-mini-2025-01-31",
        "gpt-4o-audio-preview-2024-12-17",
        "gpt-4o-mini-audio-preview-2024-12-17",
        "o1-2024-12-17",
        "gpt-4o-2024-11-20",
        "gpt-4o-audio-preview-2024-10-01",
        "o1-mini-2024-09-12",
        "o1-preview-2024-09-12",
        "gpt-4o-2024-08-06",
        "gpt-4o-mini-2024-07-18",
        "gpt-4o-2024-05-13",
        "gpt-4-turbo-2024-04-09",
        "gpt-3.5-turbo-0125",
        "gpt-4-0125-preview",
        "gpt-3.5-turbo-1106",
        "gpt-4-1106-preview",
        "gpt-4-0613",
        "gpt-4-0314",
    ]
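For anyone who wants to reproduce the “disregard alias models” step, here is one possible filter. The regular expression is an assumption about what counts as a dated ID (a `YYYY-MM-DD` suffix, or a four-digit snapshot such as `0613` with an optional `-preview`), not the script actually used above; `is_dated` and `encoding_for` are names invented for this sketch.

```python
import re

# Assumed shape of a dated model ID: "...-2025-04-14", "...-0613" or "...-0125-preview".
DATED_RE = re.compile(r"-(?:\d{4}-\d{2}-\d{2}|\d{4}(?:-preview)?)$")


def is_dated(model: str) -> bool:
    """True when the ID ends in a date or four-digit snapshot, False for aliases."""
    return DATED_RE.search(model) is not None


def encoding_for(model: str) -> str:
    """Same slice test as the one-liner above, wrapped in a function."""
    return "cl100k_base" if model[4:6] in ["4", "4-", "3."] else "o200k_base"


sample = ["gpt-4o", "gpt-4o-2024-05-13", "gpt-4-0613", "codex-mini-latest"]
dated_only = {m: encoding_for(m) for m in sample if is_dated(m)}
```

With this filter an alias-only model like codex-mini-latest is simply dropped, which may or may not be what you want.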

Playing around a bit with a PoC for a Twine generator:

Nice! Would love to see a dedicated thread on this if you have time.

@phyde1001 and I were talking about Twine recently, I believe?

Interested in what you’re cooking up!

I’ve made a few playable gamebook samples:

- The secret dungeon - made with o4-mini/gpt-4.1-mini

- Whimwood whirl - made with o3/gpt-4.5-preview

Still a work in progress though.

i started out using all my context to get gpt3 to do those.

a finished thinger would be pretty cool

a lot of people are trying it out on the social medias like that,

and packaging it

and it stinks

it’s just waiting for someone to do a really good job at it!

The Orchestrated Cognitive Perspectives (OCP) System - A Self-Improving Multi-Agent AI

Hey everyone,

I’ve put together a comprehensive write-up on a cognitive architecture I’ve been developing, called the Orchestrated Cognitive Perspectives (OCP) system. Before you dive into the full document, I wanted to provide a quick summary of what it’s all about and the problems it’s designed to solve.

The Problem with Monolithic AI:

Modern LLMs are incredibly powerful, but for complex, multi-faceted problems, they can act like a “black box.” It’s often hard to ensure they’re considering all angles (like security, long-term strategy, and creative alternatives simultaneously), their reasoning process is opaque, and their capabilities are fixed.

Our Solution: A “Cognitive Committee” of AI Agents

The OCP system tackles these issues by moving away from a single model. Instead, it functions like an “executive-led committee” of specialized AI agents. Here’s the core concept:

- A “Chief” Orchestrator: A central AI agent acts as the project manager or executive function. It analyzes a high-level goal, breaks it down, and decides which “specialist” perspectives are needed.

- A Team of Specialists: A team of other AI agents (like an Evaluator, Strategist, Sentinel for security, Innovator, etc.), each with a unique, focused prompt, work in parallel to provide their expert analysis on the same problem.

- Iterative Synthesis: The Chief gathers these diverse inputs, synthesizes them, identifies gaps or conflicts, and then decides on the next logical step, whether that’s another round of specialist analysis, asking the user for input, or formulating a final plan.

What’s in the Full Write-Up?

Clicking below will take you to a detailed document that breaks down:

- The Core Architecture: A deep dive into the system’s design, including the model-agnostic, text-based protocol that allows it to use different LLMs (like GPT-4o-mini and Claude 3 Haiku) interchangeably.

- The Full Cognitive Loop: A step-by-step explanation of how a complex task is processed from initial goal to final output.

- Dynamic Agent Creation: An explanation of one of the system’s most powerful features: its ability to recognize when it’s missing a required skill and dynamically create and deploy new specialist agents at runtime, complete with AI-generated prompts.

- Data-Driven Self-Improvement: Details on how the system logs the performance of every agent, analyzes this data to find weaknesses, and uses its own AI to suggest and deploy prompt improvements, creating a feedback loop for getting better over time.

- Conflict Resolution & Voting: A look at the “cognitive democracy” aspect, where the system can use a voting mechanism with an executive tie-breaker to resolve high-stakes disagreements between specialists.

This system is my attempt at building a more interpretable, adaptable, and efficient reasoning engine. The full write-up contains the technical details, design principles, and a deeper look at these key features.

Looking forward to your thoughts and feedback!

[Click Here to Read the Full System Write-Up]

The Orchestrated Cognitive Perspectives (OCP) System - A Self-Improving Multi-Agent AI

Executive Summary

The Orchestrated Cognitive Perspectives (OCP) system is an advanced cognitive architecture designed to solve complex, multi-faceted problems through the orchestrated collaboration of specialized AI agents. Unlike monolithic AI systems that rely on a single large model, OCP functions as an “executive-led committee,” where a central coordinating agent (the “Chief”) dynamically tasks a team of specialist agents, each with a unique prompt defining its specific cognitive function (e.g., critical analysis, strategic planning, security verification). The system is built on a model-agnostic, text-based protocol, allowing it to leverage different LLMs (like GPT-4o-mini, Claude 3 Haiku) for different roles based on performance, cost, and task requirements. Key innovations include its ability to dynamically create new specialist agents to fill identified capability gaps at runtime, a data-driven framework for self-improvement, and a robust mechanism for conflict resolution, making it a truly adaptive and scalable reasoning engine.

1. The Problem Solved: Beyond Monolithic AI

Modern Large Language Models (LLMs) are incredibly powerful but often operate as a “black box,” providing answers without a transparent reasoning process. For complex, multi-step problems (e.g., evaluating a technical proposal, designing software, conducting research), this approach has several limitations:

- Lack of Specialization: A single model, even a large one, may not possess the nuanced, specialized “perspective” needed for all facets of a problem (e.g., the differing mindsets of a creative strategist, a cautious security auditor, and a meticulous code reviewer).

- Lack of Interpretability: The final output is delivered without a clear “chain of thought,” making it difficult to trust, debug, or understand how a conclusion was reached.

- Static Capabilities: A standard LLM’s capabilities are fixed at the time of its training. It cannot dynamically create new cognitive “skills” when faced with a novel problem.

- Inefficiency: Using a large, expensive flagship model for every part of a task, including simpler sub-tasks, is not cost-effective.

The OCP system is designed to solve these problems by creating a structured, interpretable, and adaptive framework for AI-driven problem-solving.

2. System Overview: Architecture and Core Principles

The OCP is architected with a strict separation of concerns, ensuring modularity, testability, and flexibility.

2.1. Architectural Layers

- Main AI / Application Layer: This is the highest level, responsible for overall user/system goals and interaction with the external world (UI, file system, APIs, tool execution). It decomposes high-level goals into discrete cognitive sub-tasks. When it encounters a problem requiring deep reasoning, it delegates the sub-task to the Cognitive System via a dedicated tool call (e.g., request_cognitive_assessment).

- Cognitive System (OCP - The Core Engine): This is the heart of the system. It is a tool-agnostic, text-in/text-out reasoning engine. It receives a cognitive sub-task and orchestrates its internal agents to produce a reasoned text output (e.g., a plan, analysis, decision). It has no direct access to the outside world.

- Controller / Middleware: This C# layer hosts the Cognitive System and acts as the bridge between the Main AI and the OCP. It manages the state machine of the cognitive loop, parses directives, and dispatches requests to the appropriate agents.

2.2. Core Principles

- Cognitive Specialization: The system is built on the premise that complex problems are best solved by combining multiple specialized perspectives. Each agent has a unique system prompt that defines its “personality,” focus, and function (e.g., EvaluatorBasePrompt, SentinelBasePrompt).

- Executive Orchestration: A single “Chief” agent acts as the executive function, analyzing the goal, planning the cognitive steps, activating specialists, synthesizing their inputs, and making decisions. This centralizes control and ensures the process remains goal-oriented.

- Structured Communication Protocol: All internal control flow is managed via a strict, text-based protocol using tags (e.g., [ACTIVATION_DIRECTIVES], [REQUEST_AGENT_CREATION]). This makes the system model-agnostic and ensures reliable parsing by the C# controller.

- Dynamic Capability Expansion: The system is not limited to its initial set of agents. The Chief can identify a missing cognitive skill and issue a directive to create a new, specialized agent at runtime.
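To make the tag-based protocol a little more concrete, here is a small Python sketch of how a controller could split a Chief response into reasoning, directive tag, and payload. The real controller is described as C#, and the write-up does not show what follows a tag, so the `AgentName: focus` payload lines and both function names are assumptions made purely for illustration.

```python
import re
from typing import Dict, Optional, Tuple

# Tag names taken from the write-up; the payload format below is hypothetical.
KNOWN_TAGS = {"ACTIVATION_DIRECTIVES", "ACTIVATE_TEAM", "REQUEST_AGENT_CREATION"}
TAG_RE = re.compile(r"^\[([A-Z_]+)\][ \t]*$", re.MULTILINE)


def split_directive(response: str) -> Tuple[str, Optional[str], str]:
    """Return (reasoning, tag, payload) using the last known tag on its own line."""
    matches = [m for m in TAG_RE.finditer(response) if m.group(1) in KNOWN_TAGS]
    if not matches:
        return response.strip(), None, ""
    last = matches[-1]
    reasoning = response[: last.start()].strip()
    payload = response[last.end():].strip()
    return reasoning, last.group(1), payload


def parse_activations(payload: str) -> Dict[str, str]:
    """Parse assumed 'AgentName: focus text' lines into a name -> focus mapping."""
    result = {}
    for line in payload.splitlines():
        name, sep, focus = line.partition(":")
        if sep and name.strip():
            result[name.strip()] = focus.strip()
    return result
```

Because everything is plain text, the same parsing works regardless of which LLM produced the response, which is the model-agnostic property the principle above is after.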

3. How It Works: The Cognitive Processing Loop

A cognitive sub-task proceeds through an iterative loop managed by the Controller and driven by the Chief agent’s decisions.

Step 1: Initiation

The Main AI provides a sub-task goal (e.g., “Evaluate proposal V5”). The Controller sets the internal state to AwaitingChiefInitiation and sends an initial prompt to the Chief agent.

Step 2: Decomposition and Delegation (Chief’s First Turn)

The Chief analyzes the goal. Based on its prompt, it decomposes the problem and decides which specialist perspectives are needed. It formulates a response containing its reasoning, followed by a concluding directive tag. A key feature is Activation-Time Context Control, where the Chief can specify how much history an agent sees (SESSION_HISTORY_COUNT) and its memory mode (HISTORY_MODE=CONVERSATIONAL|SESSION_AWARE|STATELESS) for that specific task, allowing for highly flexible and token-efficient agent usage.

Step 3: Parallel Specialist Processing

The Controller parses the [ACTIVATION_DIRECTIVES] (or [ACTIVATE_TEAM]) tag. It then dispatches focused requests to all specified agents in parallel. Each specialist receives its unique focus directive and the appropriate context and begins processing.

Step 4: Response Collection

As each specialist agent completes its task, it sends its text-based response back. The Controller’s AgentResponseEvent handler collects these responses, tracking which of the expected agents have replied.

Step 5: Synthesis and Iteration (Chief’s Subsequent Turns)

Once all expected specialists have responded, the Controller changes the state to AwaitingChiefSynthesis. It bundles all the specialist responses into a single prompt for the Chief, using [AGENT]…[/RESPONSE] tags to clearly demarcate each input.

The Chief then performs its most critical function: synthesis and evaluation. It analyzes the specialist inputs, identifies agreements, conflicts, and gaps, and decides the next logical cognitive step. This could be another round of activation, requesting user input, requesting a new agent, or producing a final output. This loop continues until the task is complete.
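The five steps above can be compressed into a short Python sketch. It is not the actual C# controller: the Chief here returns a plain dict instead of tagged text, the two extra states (`AWAITING_SPECIALISTS`, `COMPLETE`) are invented, and the bundling format is a simple `name: reply` line rather than the tag format the write-up mentions.

```python
import asyncio
from enum import Enum, auto
from typing import Awaitable, Callable, Dict


class State(Enum):
    AWAITING_CHIEF_INITIATION = auto()  # named in the write-up
    AWAITING_SPECIALISTS = auto()       # hypothetical intermediate state
    AWAITING_CHIEF_SYNTHESIS = auto()   # named in the write-up
    COMPLETE = auto()                   # hypothetical terminal state


Specialist = Callable[[str], Awaitable[str]]
Chief = Callable[[State, str], Awaitable[dict]]


async def run_cognitive_loop(
    goal: str, chief: Chief, specialists: Dict[str, Specialist], max_rounds: int = 3
) -> str:
    state = State.AWAITING_CHIEF_INITIATION
    chief_input = goal
    for _ in range(max_rounds):
        decision = await chief(state, chief_input)
        if "final" in decision:
            state = State.COMPLETE
            return decision["final"]
        # Dispatch every requested specialist in parallel and wait for all replies.
        state = State.AWAITING_SPECIALISTS
        activations = decision["activate"]
        replies = await asyncio.gather(
            *(specialists[name](focus) for name, focus in activations.items())
        )
        # Bundle the replies into one prompt for the Chief's synthesis turn.
        chief_input = "\n".join(
            f"{name}: {reply}" for name, reply in zip(activations, replies)
        )
        state = State.AWAITING_CHIEF_SYNTHESIS
    raise RuntimeError("no final output within max_rounds")
```

The round limit is an addition of this sketch; the write-up only says the loop continues until the task is complete.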

4. Key Features and Components

4.1. Dynamic Capability Expansion

A key innovation of the OCP system is its ability to move beyond a static set of capabilities. It can dynamically expand its own cognitive toolkit at runtime, driven by the reasoning of the Chief agent.

- Mechanism: [REQUEST_AGENT_CREATION]

  - Gap Identification: During synthesis, the Chief agent evaluates the available specialist inputs against the goal. If it determines that a specific cognitive skill is needed but no existing agent is a good fit, it decides to create a new one.

  - Autonomous Prompt Engineering: The Chief’s CORE FUNCTIONS explicitly task it with generating a complete, well-structured system prompt for the new agent. It defines the NAME, PURPOSE, CAPABILITIES, and the full prompt text.

  - Controller Execution: The C# controller parses this directive. Upon approval (from a user or higher-level AI), it adds the new agent to the AgentDatabase and creates a runtime instance in the AIManager, making it immediately available for activation.

- Impact: This transforms the system from a fixed framework into an adaptive one. It can tailor its own architecture to the specific domain of the problem it’s trying to solve, effectively learning and growing its capabilities.

4.2. Data-Driven Self-Improvement & Evaluation

The OCP system is designed not just to perform tasks, but to learn from its performance through a robust feedback and evaluation loop.

- Mechanism: Chief-led Evaluation and Performance Tracking

  - Rating by the Chief (Implicit Feedback): The Chief continuously evaluates the quality and relevance of specialist responses. This acts as a constant stream of performance feedback. A good response is built upon; a bad one is discarded or corrected. This implicit “+/-” rating is logged for every interaction in the AgentDatabase. For significant errors, the Chief can add explicit comments or initiate a corrective cycle.

  - Data-Driven Analysis (PromptRefinementSystem): Periodically, the system analyzes the rich history in AgentDatabase. It aggregates the thousands of “micro-ratings” into quantitative performance scores for each agent on different task types, identifying specific weaknesses (e.g., “The Coder agent has a low success rate on ‘Refinement’ tasks”).

  - Automated Improvement Cycle: The analysis results are fed to a PromptGenerator, which uses an AI (typically the Chief) to generate suggestions for improving an underperforming agent’s prompt. After review, the improved prompt is deployed as a new version in the database, allowing the system to evolve while preserving the ability to revert to a previous, stable state.

- Impact: This creates a powerful, data-driven self-improvement loop. The system actively measures its own internal performance and uses its own reasoning capabilities to refine its components, becoming more effective and efficient over time.
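The aggregation of “+/-” micro-ratings into per-agent, per-task scores could be as simple as a success rate. The sketch below assumes each logged rating is a tuple of agent name, task type, and +1 or -1; the 0.5 threshold and the function names are illustrative choices, not details from the PromptRefinementSystem.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Rating = Tuple[str, str, int]  # (agent, task_type, +1 or -1), an assumed log shape
Key = Tuple[str, str]


def success_rates(ratings: Iterable[Rating]) -> Dict[Key, float]:
    """Fraction of positive ratings for each (agent, task_type) pair."""
    totals = defaultdict(lambda: [0, 0])  # key -> [positive count, total count]
    for agent, task_type, score in ratings:
        entry = totals[(agent, task_type)]
        if score > 0:
            entry[0] += 1
        entry[1] += 1
    return {key: positive / total for key, (positive, total) in totals.items()}


def weak_spots(rates: Dict[Key, float], threshold: float = 0.5) -> List[Key]:
    """Pairs whose success rate falls below the (arbitrary) threshold."""
    return sorted(key for key, rate in rates.items() if rate < threshold)
```

The output of `weak_spots` is the kind of finding that would then be handed to a prompt generator, e.g. the Coder agent scoring poorly on Refinement tasks.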

4.3. Conflict Resolution (Voting & Tie-Breaking)

While the Chief’s synthesis handles most disagreements, the architecture includes a “democratic” mechanism for resolving high-stakes conflicts where multiple specialists provide mutually exclusive, high-confidence answers.

- Mechanism: Voting and Executive Tie-Breaker

  - Conflict Identification: The Chief identifies a direct conflict (e.g., Innovator suggests an experimental approach, while Strategist suggests a stable one).

  - Initiating a Vote: If unable to resolve the conflict, the Chief can issue a special directive to initiate a vote.

  - Agent Voting: The conflicting options are presented back to other relevant agents in the team. Each agent “votes” for the option that best aligns with its core function (e.g., Sentinel votes for the less risky option).

  - The Chief as Tie-Breaker: The votes are tallied by the Controller. If there is no clear majority, the Chief’s evaluation serves as the final, tie-breaking vote, ensuring a decision is always made.

- Impact: This process leverages the collective wisdom of the cognitive team to resolve critical deadlocks, adding a layer of robustness to the system’s decision-making process.
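A tally with an executive tie-breaker fits in a few lines. This sketch reads “clear majority” as more than half of the votes cast, which is an interpretation rather than something the write-up specifies; `resolve_vote` is a made-up name.

```python
from collections import Counter
from typing import Dict


def resolve_vote(votes: Dict[str, str], chief_choice: str) -> str:
    """Return the majority option, or the Chief's choice when no option has one."""
    tally = Counter(votes.values())
    if not tally:
        return chief_choice
    option, count = tally.most_common(1)[0]
    # Assumed rule: a clear majority means strictly more than half of all votes.
    if count > len(votes) / 2:
        return option
    return chief_choice


winner = resolve_vote(
    {"Sentinel": "stable", "Evaluator": "stable", "Innovator": "experimental"},
    chief_choice="experimental",
)
```

Because the Chief's choice is the fallback, the function always returns a decision, matching the guarantee described above.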

4.4. Core Technical Components

- AIManager & AIAgent: C# classes that manage the runtime lifecycle and execution of individual agents, handling API calls to different LLM providers (e.g., OpenAI, Anthropic).

- AgentDatabase: A comprehensive SQLite database serving as the system’s long-term memory, storing agent definitions, prompt versions, performance metrics, interaction history, and team compositions.

- Model Agnosticism: The system is designed to be independent of any single LLM provider. This allows for a “mix-and-match” approach, using powerful models for critical roles like the Chief, while using faster, cheaper models (like GPT-4o-mini or Claude 3 Haiku) for more routine specialist tasks based on performance, need, and price.
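As an illustration of how versioned prompts with revert could sit in SQLite, here is a small sketch using Python's built-in `sqlite3`. The two-table schema and all function names are hypothetical; the actual AgentDatabase layout is not shown in the write-up.

```python
import sqlite3


def open_db() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical two-table schema."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE prompt_versions ("
        " agent TEXT NOT NULL, version INTEGER NOT NULL, prompt TEXT NOT NULL,"
        " PRIMARY KEY (agent, version))"
    )
    conn.execute(
        "CREATE TABLE active_prompt (agent TEXT PRIMARY KEY, version INTEGER NOT NULL)"
    )
    return conn


def deploy_prompt(conn: sqlite3.Connection, agent: str, prompt: str) -> int:
    """Store the prompt as the next version for the agent and make it active."""
    row = conn.execute(
        "SELECT COALESCE(MAX(version), 0) FROM prompt_versions WHERE agent = ?", (agent,)
    ).fetchone()
    version = row[0] + 1
    conn.execute("INSERT INTO prompt_versions VALUES (?, ?, ?)", (agent, version, prompt))
    conn.execute("INSERT OR REPLACE INTO active_prompt VALUES (?, ?)", (agent, version))
    return version


def revert_prompt(conn: sqlite3.Connection, agent: str, version: int) -> None:
    """Point the agent back at an earlier stored version without deleting anything."""
    exists = conn.execute(
        "SELECT 1 FROM prompt_versions WHERE agent = ? AND version = ?", (agent, version)
    ).fetchone()
    if exists is None:
        raise ValueError(f"no version {version} for agent {agent}")
    conn.execute("INSERT OR REPLACE INTO active_prompt VALUES (?, ?)", (agent, version))


def active_prompt(conn: sqlite3.Connection, agent: str) -> str:
    """Return the prompt text of the agent's currently active version."""
    row = conn.execute(
        "SELECT p.prompt FROM active_prompt a JOIN prompt_versions p"
        " ON p.agent = a.agent AND p.version = a.version WHERE a.agent = ?",
        (agent,),
    ).fetchone()
    return row[0]
```

Keeping every version and only moving an “active” pointer is what makes reverting to a previous, stable prompt cheap.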

5. Conclusion: A System That Reasons About Reasoning

The Orchestrated Cognitive Perspectives system represents a significant step beyond simple prompt-response AI. It is an architecture that facilitates structured reasoning, dynamic adaptation, and data-driven self-improvement. By treating cognitive functions as specialized, composable modules and placing them under the control of a strategic orchestrator, OCP can tackle problems with a depth, interpretability, and flexibility that monolithic models cannot easily achieve. Its ability to identify its own shortcomings and dynamically create new “cognitive experts” to fill those gaps makes it a powerful and promising foundation for building truly autonomous and intelligent systems.

Do you have a demo? I.e. video/pics of the system operating? Or is this white-paper only?

I’ll post a video here shortly.

An AI-Powered BadUSB & Remote C2 Platform

This isn’t just another BadUSB—it’s a complete, network-enabled remote operations platform built on an ESP32-S3, designed for penetration testers, security researchers, and advanced IT automation.

Before you click to read the full paper, here’s a summary of what this tool can do and what the document covers:

Core Capabilities:

- Intelligent HID Attacks: Functions as a programmable USB keyboard to execute payloads on a target machine.

- Full Remote Control: Hosts a sophisticated web interface, allowing you to control and manage the device remotely over WiFi from any browser (including mobile).

- On-Demand AI Payload Generation: This is the killer feature. Instead of being limited to pre-written scripts, you can use natural language prompts to generate complex PowerShell payloads on the fly.

Key Features Detailed in the Write-up:

- Multi-Provider AI Support: Integrates with OpenAI, Anthropic (Claude), and Google (Gemini).

- No API Keys Required (Out-of-the-Box Functionality): It defaults to using the DuckDuckGo Chat API, allowing it to leverage powerful models like Claude 3 Haiku for free, with no account or setup needed.

- True C2-Style Interface: The web UI features multiple real-time log panels:

  - Live Target Output: See the results of your commands as they run.

  - System Information Panel: Automatically populates with recon data from the target (hostname, user, admin status, OS, etc.).

  - Incoming Request Log: A full access log showing every HTTP request the device receives.

- Complete Remote File Management: A built-in file manager lets you create, delete, view, and organize scripts and loot files on the device’s SD card directly from the browser.

- On-Device LCD Log: A scrolling log on the physical screen provides at-a-glance status for headless diagnostics.

The full document provides a deep dive into the architecture, a detailed breakdown of each feature, and a step-by-step walkthrough of a typical security audit scenario. It’s a powerful tool, and I’m looking forward to your feedback!

[Click here to read the full write-up...]

1. Executive Summary

This is a highly sophisticated, network-enabled Human Interface Device (HID) emulation platform built on the ESP32-S3 microcontroller. At its core, it functions as a “BadUSB” or “Rubber Ducky,” capable of injecting keystrokes into a target computer as if it were a standard keyboard.

However, its capabilities extend far beyond simple script execution. The device is a fully-featured remote operations platform, managed through a clean web interface accessible over WiFi. Its standout feature is the on-demand generation of complex payloads using multiple AI providers, including OpenAI (ChatGPT), Anthropic (Claude), Google (Gemini), and DuckDuckGo (free, no-account access).

This transforms the device from a pre-programmed script-runner into an intelligent, interactive, and adaptive tool for penetration testers, security researchers, and IT administrators. It combines the principles of a BadUSB, a remote access tool, and an AI-powered assistant into a single, pocket-sized package.

2. Core Components

- Hardware: An ESP32-S3 microcontroller is essential due to its native USB capabilities, which allow it to be recognized as a keyboard. An attached SD Card serves as the device’s persistent storage. An LCD Screen provides real-time, at-a-glance status logging.

- Software:

  - WiFi Connectivity: Connects to a standard WiFi network to be accessible remotely.

  - Web Server: Hosts a comprehensive web-based control panel.

  - USB HID Library: Allows the device to emulate a USB keyboard and type commands.

  - File System: Manages scripts and settings on the SD card.

3. Key Features Explained

The platform is managed entirely through its web interface, which is broken down into several key functional areas:

a. Multi-Provider AI Integration

The device’s most powerful feature is its ability to generate payloads on the fly using natural language prompts. It supports multiple backend providers, which can be selected and configured from the Settings page:

- DuckDuckGo Chat (Default): The most significant feature for usability. It allows the device to be used “out of the box” without requiring any API keys or accounts. It leverages the free models (like gpt-4o mini) provided by DuckDuckGo’s service.

- OpenAI, Anthropic, and Gemini: For users with their own API keys, the device can leverage the full power of advanced models like GPT, Claude, and Gemini for more complex or specialized script generation.

b. Real-time Command & Control (C2) Interface

The UI provides a true “mission control” dashboard for monitoring all activity:

- System Information Panel: Displays structured data gathered from the target machine, including hostname, current user, admin status, OS version, and more.

- Live Command Output: A log panel that displays the real-time output of commands executed on the target. This is achieved by having payloads pipe their results to a dedicated /feedback endpoint on the device.

- Incoming Request Log: A complete access log that shows every single HTTP request the ESP32 server receives, including the source IP. This is invaluable for debugging and monitoring all interactions with the device.

c. Full Remote File & Script Management

The device is fully self-contained. The user can perform all necessary file operations from the web UI:

- Monaco Script Editor: A professional-grade code editor is built into the UI for writing and editing PowerShell scripts with proper syntax highlighting.

- Save/Load/Delete Scripts: Scripts can be saved by name to the SD card, listed in a dropdown menu, and loaded back into the editor for execution or modification.

- File Manager: A dedicated page allows the user to browse the entire SD card, view file contents, delete items, and create new files or directories.

d. On-Device Status Display

The attached LCD screen provides a scrolling, real-time log of the device’s own boot sequence and critical status changes, such as WiFi connection and server initialization. This allows for quick, “headless” diagnostics.

4. The Workflow: A Typical Use Case

This example demonstrates how the features work together in a typical security audit scenario.

- Deployment: An operator plugs the device into an authorized target computer. The device boots, connects to the pre-configured WiFi network, and displays its IP address on the LCD screen.

- Connection: The operator, from their own machine on the same network, navigates to the device’s IP address in a web browser.

- Reconnaissance: The operator loads a pre-saved Get-SysInfo.txt script into the editor and clicks “Execute on Target.” The script runs on the target PC, gathers system details, and sends them back as a JSON payload. The “System Information” panel in the web UI instantly populates with the target’s hostname, user, OS version, etc.

- Analysis: The operator sees that the current user is not an administrator. They know that privilege escalation scripts will fail and they need to operate within standard user permissions.

- AI-Powered Payload Generation: The operator goes to the “AI Assistant” card (using the default DuckDuckGo provider) and types the prompt: “Generate a PowerShell script that searches the user’s Desktop for files containing the word ‘password’ and sends the list of filenames back to the feedback endpoint.”

- Execution and Exfiltration: The AI returns a ready-to-use PowerShell script. The operator copies it into the script editor and clicks “Execute on Target.”

- Monitoring: As the script runs, the list of matching filenames appears, line-by-line, in the “Live Command Output” panel, confirming the success of the operation. Throughout this process, the “Incoming Request Log” shows all the /execute and /feedback requests being made.

5. Use Cases & Ethical Considerations

a. Use Cases

- Penetration Testing & Red Teaming: The primary use case. It allows security professionals to simulate a physical access attack (e.g., via a malicious USB stick) and then pivot to a remote operation, testing a company’s defenses against both. The AI allows for rapid adaptation to the target environment.

- System Auditing and Compliance: An auditor can use the device to run scripts that check for misconfigurations, weak security settings, or unauthorized software on multiple machines in a network.

- Automated IT Administration: An IT administrator could use the device to automate repetitive tasks on computers that require physical access, such as running diagnostic scripts, configuring settings, or installing software.

- Security Education and Research: It serves as a powerful, all-in-one platform for learning about HID attacks, web servers, C2 infrastructure, and API integration in a controlled environment.