I started this thread because I needed an automated approach to Hierarchical | Semantic chunking of documents for embeddings. I conceived the concept of “Semantic Chunking” back in early 2023, which I documented in a video: https://youtu.be/w_veb816Asg?si=NXAdb0lULG_-Y1l4

While I developed code to break down extracted text from PDFs hierarchically:

This is a document hierarchy header file, explained here: https://youtu.be/w_veb816Asg?si=hx7vo4x2vep-Muuj&t=386

It was still a fairly manual process, especially if I decided to use PDFs instead of extracted text. And, if the hierarchical chunks were larger than my chunk size limit, I still needed to break them down into smaller “sub-chunks”. For this, I continued to rely on the “rolling window” chunk methodology, which essentially cuts the document text into overlapping segments of a specific length.
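For anyone unfamiliar with the “rolling window” method, here is a minimal sketch of the idea. It is an illustration, not my pipeline code: the function name `rolling_window_chunks` and the `chunk_size` and `overlap` parameters are made up for this example, and it measures length in characters where a real pipeline might count tokens.

```python
def rolling_window_chunks(text: str, chunk_size: int = 1000, overlap: int = 200) -> list[str]:
    """Cut text into overlapping segments of at most chunk_size characters.

    Hypothetical helper for illustration; sizes are in characters, not tokens.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")

    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current window has reached the end of the text,
        # so we do not emit a trailing chunk that is pure overlap.
        if start + chunk_size >= len(text):
            break
    return chunks


if __name__ == "__main__":
    print(rolling_window_chunks("abcdefghij", chunk_size=4, overlap=2))
```

The weakness is easy to see: the window cuts wherever the length limit falls, so a single idea can be split across two chunks.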

What I wanted to do was develop a more automated approach which would not only preserve the document hierarchy in the embedding, but would also make sure the chunks did not exceed the chunk limit, and that those resulting “sub-chunks” were semantically organized to preserve their “atomic idea” in the resulting embeddings. The “atomic idea”, as @sergeliatko puts it, being the “ideal chunk” containing only one idea which will always match, at least theoretically, in cosine similarity searches, a similar idea posed in a RAG prompt.

I basically refer to this as “Semantic Chunking”, and my feeling was that we should be able to use GPT-4o to accomplish it.

This thread explored three approaches to “Semantic Chunking”. The first, introduced by @sergeliatko, focused on capturing the “atomic idea” on the sentence level and then building out from there. I call it the “inside out” approach. The second, mine, used a “layout aware” approach that analyzes the hierarchy of the document and then drills down through the hierarchical levels to capture the “atomic ideas” at the lowest levels. I call it the “outside in” approach. Finally, the @jr.2509 approach appears to fall somewhere between the other two. This is only my overview summary; please feel free to offer more detailed explanations in comments to this post so that others can understand the potential benefits of each approach.

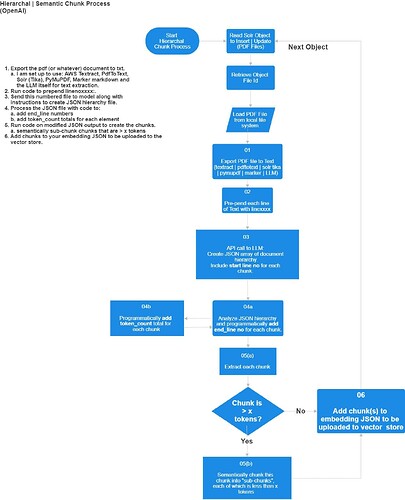

So, after much discussion and valuable ideas and insights contributed, I was able to finally come up with a process which I have implemented in my embedding pipeline:

As a failsafe measure, if at any point in this process there is a failure, it will automatically revert back to the “rolling window” approach mentioned earlier.
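The failsafe can be sketched roughly as follows. This is an assumption-laden illustration rather than my actual pipeline: `chunk_with_fallback`, `semantic_chunker`, `max_chars`, and `overlap` are hypothetical names, the semantic step is passed in as a plain callable (in practice it would wrap the LLM call), and lengths are counted in characters.

```python
from typing import Callable


def rolling_window(text: str, chunk_size: int, overlap: int) -> list[str]:
    """Hypothetical fallback: overlapping fixed-length character segments."""
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break
    return chunks


def chunk_with_fallback(
    text: str,
    semantic_chunker: Callable[[str], list[str]],
    max_chars: int = 1000,
    overlap: int = 200,
) -> list[str]:
    """Try semantic chunking first; revert to the rolling window on any failure."""
    try:
        chunks = semantic_chunker(text)
        # Treat empty or oversized output as a failure too (an assumption here).
        if not chunks or any(len(c) > max_chars for c in chunks):
            raise ValueError("semantic chunking produced unusable output")
        return chunks
    except Exception:
        # Any error in the semantic step triggers the fallback.
        return rolling_window(text, max_chars, overlap)


if __name__ == "__main__":
    def broken_chunker(_: str) -> list[str]:
        raise RuntimeError("LLM call failed")

    print(chunk_with_fallback("abcdefghij", broken_chunker, max_chars=4, overlap=2))
```

Catching every exception is a deliberate choice in this sketch: the goal is that a document always gets embedded, even if only with the cruder chunks.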

My embedding pipeline allows for customized configurations based upon the document classification, so this approach should work for me long-term as I can easily modify prompts, chunk sizes, extraction scripts, etc…

The one thing that would help tremendously is if OpenAI (or Google) would increase the output token limits. Right now, if I have a 750-page document, I have to write a custom script to create the first-line semantic chunking described in the video. If the LLM could return more than 8K tokens, I could easily have it return a JSON file that could then be used with a default script to always be able to create document hierarchy header files. So, until then, that still has to be a manual process. But for smaller (fewer than 100 pages) documents, it’s fully automated Baby!

If there is no further discussion on this particular topic, I will mark this as the solution for me. I know @jr.2509 has mentioned expanding this out to document comparisons, but I think we should start another thread for that discussion as I would also like to discuss how to achieve more comprehensive results from RAG queries.