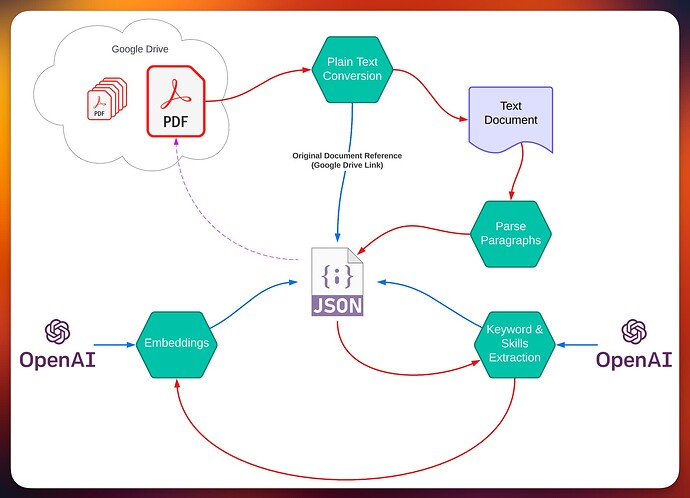

What you’re describing begins with classifying the document: is it a resume, for example? But doing that, along with other things such as establishing a deep “understanding” of the document, requires transforming the generally unstructured document into something that has not only structure but also data attributes that help describe its meaning.

Your first inclination to transform it into JSON was correct. I’m inclined to take that to the next level by parsing the document into individual paragraphs to make it more manageable for AI operations, the first of which is to extract keywords, along with other entities and skills, from each paragraph. Once we have this data, we are in a better position to classify the document, for example as a resume.
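To make the paragraph-level idea concrete, here is a minimal sketch in Python. It assumes paragraphs are separated by blank lines and uses a naive word-frequency count as a stand-in for real keyword and entity extraction. The names `extract_keywords` and `document_to_json`, the stopword list, and the sample text are all hypothetical and only there for illustration.

```python
import json
import re
from collections import Counter

# Tiny illustrative stopword list (an assumption; a real system would use a fuller one).
STOPWORDS = {"a", "an", "and", "the", "of", "in", "on", "for", "to", "with", "at", "as", "is"}

def extract_keywords(text, top_n=3):
    """Naive keyword extraction: the most frequent non-stopword tokens."""
    tokens = re.findall(r"[a-z]+", text.lower())
    counts = Counter(t for t in tokens if t not in STOPWORDS and len(t) > 2)
    return [word for word, _ in counts.most_common(top_n)]

def document_to_json(raw_text):
    """Split the text on blank lines and attach keywords to each paragraph."""
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", raw_text) if p.strip()]
    return {
        "paragraphs": [
            {"index": i, "text": p, "keywords": extract_keywords(p)}
            for i, p in enumerate(paragraphs)
        ]
    }

# Made-up sample text standing in for an extracted resume.
sample = (
    "Certified welder with ten years of experience.\n\n"
    "Skills: MIG welding, TIG welding, blueprint reading."
)
doc = document_to_json(sample)
print(json.dumps(doc, indent=2))
```

In practice the keyword step would likely be handled by an NLP library or a language model rather than a frequency count, but the shape of the enriched JSON would be similar.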

But my approach would also use the keywords and entities as the basis for building embedding vectors, which would allow us to perform semantic similarity searches. I would design the system so that all of this data is used to enhance the original JSON representation of the document.

Embedding vectors are very inexpensive, and they come in handy when someone needs to query all the documents that mention welding, as an example. This sounds like a keyword match process, but it’s not. It needs to perform well when someone uses a related term like “iron work”, but not “welding” itself. This is why embedding vectors are so important. They allow us to find documents whose vectors are close to the vector for “welding”, such as those for “iron work”. The cosine similarity test gives us a similarity score (the closer to 1, the closer in meaning), which makes it easy to rank the matches.
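Here is a small sketch of the cosine similarity calculation in plain Python. The three-dimensional vectors are hand-made toy values chosen only to show the mechanics; real embeddings would come from an embedding model and have hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (range -1 to 1)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as having no similarity
    return dot / (norm_a * norm_b)

# Toy vectors (assumptions for illustration, not real model output).
welding = [0.9, 0.8, 0.1]
iron_work = [0.8, 0.9, 0.2]
accounting = [0.1, 0.0, 0.9]

sim_related = cosine_similarity(welding, iron_work)
sim_unrelated = cosine_similarity(welding, accounting)
print(f"welding vs iron work:  {sim_related:.3f}")
print(f"welding vs accounting: {sim_unrelated:.3f}")
```

With these toy values the related pair scores much higher than the unrelated pair, which is the behavior a semantic search relies on.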

A Unified Database

This approach makes it possible to move the JSON documents into a database, or back into a file system. You could even store the documents as files but update a database or spreadsheet with the metadata, so that you could run other workflows that use AI.
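As one possible way to do this, the sketch below stores the JSON representation and a few metadata fields in SQLite using Python's standard library. The table layout, the column names, and the `save_document` helper are assumptions made for illustration; any database or even a spreadsheet could play the same role.

```python
import json
import sqlite3

# In-memory database for the example; a file path would be used in practice.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE documents (
        id INTEGER PRIMARY KEY,
        filename TEXT,
        doc_type TEXT,
        keywords TEXT,  -- JSON-encoded list of keywords
        body TEXT       -- JSON-encoded document representation
    )
    """
)

def save_document(conn, filename, doc_type, keywords, doc):
    """Store the metadata alongside the serialized JSON document."""
    conn.execute(
        "INSERT INTO documents (filename, doc_type, keywords, body) VALUES (?, ?, ?, ?)",
        (filename, doc_type, json.dumps(keywords), json.dumps(doc)),
    )
    conn.commit()

# Hypothetical document used only to exercise the helper.
save_document(
    conn,
    "jane_doe.json",
    "resume",
    ["welding", "fabrication"],
    {"paragraphs": [{"index": 0, "text": "Certified welder."}]},
)

row = conn.execute(
    "SELECT filename, doc_type, keywords FROM documents WHERE doc_type = ?",
    ("resume",),
).fetchone()
print(row[0], row[1], json.loads(row[2]))
```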

A Conversational UX

This architecture also makes it possible to create a chat-like interface. As users ask questions about the documents, an embedding is generated for each question and compared with the document embeddings to narrow the list down to only the closest vectors. This makes it possible to use natural language to find very suitable documents.
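The narrowing step might look something like the sketch below. It assumes the question has already been turned into a vector by the same embedding model used for the documents; here both the question vector and the document vectors are hand-made toy values, and `top_matches` and the filenames are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def top_matches(query_vector, documents, k=2):
    """Return the k (score, filename) pairs closest to the query vector."""
    scored = [
        (cosine_similarity(query_vector, d["embedding"]), d["filename"])
        for d in documents
    ]
    scored.sort(reverse=True)  # highest similarity first
    return scored[:k]

# Toy document embeddings (assumptions, not real model output).
documents = [
    {"filename": "welder_resume.json", "embedding": [0.9, 0.8, 0.1]},
    {"filename": "ironworker_resume.json", "embedding": [0.8, 0.9, 0.2]},
    {"filename": "accountant_resume.json", "embedding": [0.1, 0.0, 0.9]},
]

# Stand-in for the embedding of a question such as
# "Who has metal fabrication experience?"
question_vector = [0.85, 0.85, 0.1]

results = top_matches(question_vector, documents, k=2)
for score, filename in results:
    print(f"{score:.3f}  {filename}")
```

A linear scan like this is fine for a small collection; a larger one would probably call for a vector index, which is outside what this sketch covers.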

A Reporting UX

If you load the structured JSON document representations into a database, you also gain the ability to create reporting narratives simply by asking questions in natural language. This requires aggregation techniques, but the outcome is a reporting layer that lets you extract lists and statistics about the documents.
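The aggregation side of that reporting layer can be sketched as follows. This only shows the counting step over already-structured records; translating a natural language question into such an aggregation is not shown. The records, field names, and values are made up for illustration.

```python
from collections import Counter

# Hypothetical metadata records, as they might be read back from the database.
records = [
    {"filename": "a.json", "doc_type": "resume", "skills": ["welding", "blueprint reading"]},
    {"filename": "b.json", "doc_type": "resume", "skills": ["welding", "rigging"]},
    {"filename": "c.json", "doc_type": "invoice", "skills": []},
]

# How many documents of each type do we have?
type_counts = Counter(r["doc_type"] for r in records)

# Which skills appear most often across resumes?
skill_counts = Counter(
    skill
    for r in records
    if r["doc_type"] == "resume"
    for skill in r["skills"]
)

# Which resumes list a given skill?
welders = [r["filename"] for r in records if "welding" in r["skills"]]

print(dict(type_counts))
print(skill_counts.most_common(3))
print(welders)
```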