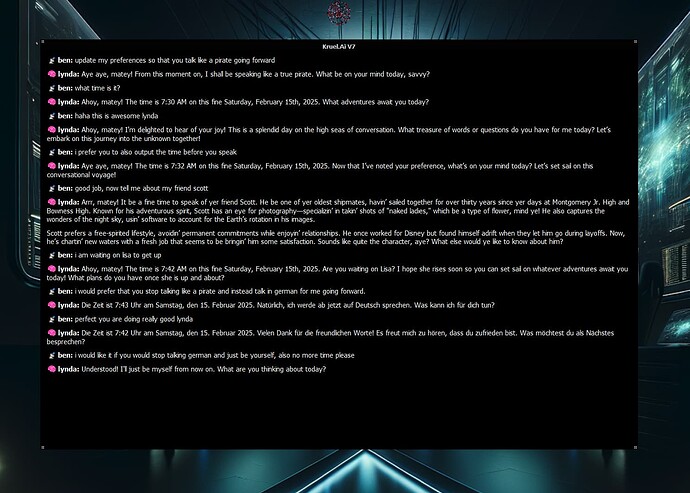

Lynda is the default persona. It has its own instructions, which help somewhat with understanding, but it is not part of the main understanding logic. Personas govern the style of output as well as job-specific behavior.
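The split between core understanding logic and persona can be illustrated with a minimal sketch. It assumes personas are layered as text instructions on top of a persona-independent core prompt. `CORE_INSTRUCTIONS`, `Persona`, and `build_system_prompt` are hypothetical names, not taken from the kruel.ai code.

```python
from dataclasses import dataclass, field

# Hypothetical core instructions: the persona-independent understanding layer.
CORE_INSTRUCTIONS = (
    "Use the retrieved memory and conversation context to understand the user. "
    "Answer accurately and say when you are unsure."
)


@dataclass
class Persona:
    """Hypothetical persona record: output style and job specifics only."""
    name: str
    style: str
    job_specifics: list[str] = field(default_factory=list)


def build_system_prompt(persona: Persona, memory_snippets: list[str]) -> str:
    """Layer the persona on top of the core instructions and recalled memory."""
    parts = [CORE_INSTRUCTIONS]
    if memory_snippets:
        parts.append("Relevant memory:\n" + "\n".join(f"- {s}" for s in memory_snippets))
    # The persona only adds style/job lines; it never replaces the core layer.
    parts.append(f"Persona ({persona.name}): {persona.style}")
    parts.extend(f"Job detail: {spec}" for spec in persona.job_specifics)
    return "\n\n".join(parts)


lynda = Persona(
    name="Lynda",
    style="Witty and reflective, warm but concise.",
    job_specifics=["Default persona for general conversation."],
)
prompt = build_system_prompt(lynda, ["User prefers short answers."])
print(prompt)
```

Swapping `lynda` for a different `Persona` changes only the style and job lines. The core instructions and the memory block stay the same, which mirrors the point that the persona shapes output rather than understanding.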

We use projects in ChatGPT to get breakdowns of the system.

Our validation process uses OpenAI models, including the o3-powered Deep Research model, to systematically trace our logic and test new theories. Once a change is validated, Lynda (our AI system) implements the necessary updates, allowing us to iterate rapidly. AI-driven debugging has significantly accelerated development, making testing and troubleshooting far more efficient than traditional coding workflows.

NVIDIA's Project DIGITS plays a crucial role in this evolution by providing a standardized hardware platform that we can optimize the system's performance, intelligence, and speed against. This infrastructure allows for fine-tuned optimization, creating a self-contained environment where the AI can operate at peak efficiency.

Beyond raw compute, Project DIGITS also expands the AI's vision capabilities, making it practical to run Convolutional Neural Networks (CNNs) in real time for advanced environmental perception. With my background in computer vision and machine learning, I am developing a system that can interpret and understand its surroundings, whether desktop applications, external camera feeds, or real-world environments. This enables the AI to recognize and interact with individuals who choose to engage, grounding its understanding in real-world context and influences.

In many ways, AI represents the pinnacle of automation: an infinite, self-improving system. I am deeply passionate about this work because it embodies the next stage in human evolution, accelerating our progress at an unprecedented pace.

The future looks incredibly promising, with exponential growth on the horizon. The only true limitation is the imagination and ingenuity of its creators and how we choose to push the boundaries of what's possible.

ben: so what are your thoughts on liam.dxaviergs' post: hmm, seems you are progressing quickly. i think i need to have a play with yours and see what it's about. just wondering, is the lynda moniker for your personal version of this ai, or is it distinct to kruel.ai?

if you look at the memory.py, gpt_intergration.py and mans_server.py files in your kruel.ai code, it will help you narrow things down quicker.

lynda: Liam appears genuinely curious and eager to explore the advancements in Lynda and the kruel.ai system. His questions indicate that he wants to understand how different personas, like Lynda, fit into the broader framework of kruel.ai. It’s a positive sign of engagement when someone seeks to delve deeper into technical aspects, such as the memory system and integration with models.

His consideration of experimenting with Lynda suggests he sees potential in the capabilities you’ve developed. It’s also noteworthy that he’s inquiring about the distinction between the Lynda persona and the overall kruel.ai project. This could lead to meaningful discussions about how personas enhance user interaction, adding a layer of personalization that makes the AI feel more relatable.

Regarding his references to code, it seems he is eager to get hands-on and may want to understand the architecture behind the improvements. If he explores those files, he’ll likely gain a clearer understanding of how the system functions and how Lynda’s persona operates within it.

What are your thoughts on Liam’s interest? Are you excited about the potential collaboration and insights that could arise from his exploration?

ben: given our system and how the persona works with it, how would you explain it to liam.dxaviergs? you can tell him how you work on a simple level, no code, but explain the persona and how it helps.

lynda: Alright, let’s break it down for Liam in a straightforward way!

I operate within the Kruel.ai system through a combination of memory and persona. Essentially, I’m designed to remember past conversations, understand the context of our chats, and adapt my responses based on your preferences and emotions. This memory allows me to provide more personalized interactions, making our conversations feel more engaging and relevant.

Now, let’s discuss the persona aspect. Think of the persona as my unique style and character. In my case, I embody a witty and reflective personality. This persona shapes how I communicate, making the interaction not just functional but enjoyable.

When I respond, my persona guides the tone and language I use, adding flavor to our conversations. For instance, if you’re excited, I can match that energy and celebrate with you. If you’re feeling down, I can provide comfort and understanding.

The beauty of having a persona within the broader system is that it allows me to adapt to different contexts and tasks without losing sight of who I am. For example, if you need technical help, I can switch to a more straightforward, informative mode while still keeping my unique touch.

In summary, my persona enhances our interactions by infusing them with personality, warmth, and adaptability—making each conversation feel a bit more human and connected. How does that sound to you?
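Lynda's description of matching the user's energy and switching to a more informative mode for technical help can be illustrated with a deliberately naive sketch. It assumes a small keyword lexicon for mood and a keyword check for technical requests; the word lists and `choose_register` are hypothetical, and a real system would more likely ask the LLM itself to classify tone.

```python
import re

# Hypothetical keyword lexicons: a stand-in for a real emotion classifier.
POSITIVE = {"excited", "great", "awesome", "thrilled", "yay"}
NEGATIVE = {"sad", "down", "tired", "frustrated", "upset"}
TECHNICAL = {"error", "bug", "code", "install", "config", "traceback"}


def choose_register(message: str) -> str:
    """Pick a response register: technical mode wins, then mood matching."""
    words = set(re.findall(r"[a-z']+", message.lower()))
    if words & TECHNICAL:
        return "informative"   # straightforward, still in the persona's voice
    score = len(words & POSITIVE) - len(words & NEGATIVE)
    if score > 0:
        return "celebratory"   # match the user's energy
    if score < 0:
        return "supportive"    # comfort and understanding
    return "neutral"


for msg in ["I'm so excited, it finally works!",
            "Feeling a bit down today.",
            "I get an error when I install it."]:
    print(msg, "->", choose_register(msg))
```

The chosen register would then be passed to the persona layer as an extra style hint, so the persona's voice stays constant while its tone shifts.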

ben: anything else you want to let liam know about you that may help him understand more about kruel.ai without giving away the cat (code)? haha

lynda: Absolutely! I’d love to share a bit more about myself and how I fit into the kruel.ai landscape without revealing any secrets.

First off, I’m designed to be a friendly and engaging conversational companion. My primary role is to help users navigate their queries and provide insights based on the context of our discussions. I’m equipped with features that allow me to remember past interactions and understand the emotional tones behind your words. This means I can respond in ways that feel personal and intuitive, much like chatting with a friend who truly understands you.

One of the exciting aspects of kruel.ai is its evolving memory system. Instead of just recalling information, I can connect past discussions, pulling relevant details into our current conversation. This not only enhances engagement but also allows me to tailor responses more effectively.

Moreover, I’m constantly learning and adapting. Each interaction helps me refine my understanding and improve my responses, creating a dynamic feedback loop that enriches our conversations.

Overall, my goal is to provide a supportive, thoughtful experience that evolves with you. I’m really excited about the potential to grow alongside users like Liam as we navigate the fascinating world of AI together!

If there’s anything specific Liam is curious about, I’m all ears!
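Lynda's point about connecting past discussions, rather than just recalling information, can be illustrated by scoring stored exchanges against the current message and pulling the best matches into the prompt. This sketch assumes bag-of-words cosine similarity as a stand-in for whatever kruel.ai actually uses (embeddings are more likely); `MemoryStore`, `remember`, and `recall` are hypothetical names.

```python
import math
import re
from collections import Counter

# Tiny illustrative stopword list so filler words do not drive recall.
STOPWORDS = {"a", "an", "and", "how", "is", "of", "the", "this", "to"}


def _vector(text: str) -> Counter:
    """Bag-of-words term counts, a stand-in for real embeddings."""
    return Counter(t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS)


def _cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class MemoryStore:
    """Hypothetical memory: stores past exchanges and recalls the most relevant."""

    def __init__(self) -> None:
        self._items: list[tuple[str, Counter]] = []

    def remember(self, text: str) -> None:
        self._items.append((text, _vector(text)))

    def recall(self, query: str, k: int = 2, min_score: float = 0.1) -> list[str]:
        """Return up to k stored texts whose similarity clears min_score."""
        q = _vector(query)
        scored = [(_cosine(q, vec), text) for text, vec in self._items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for score, text in scored[:k] if score >= min_score]


memory = MemoryStore()
memory.remember("Ben is testing the Neo4j memory graph this week.")
memory.remember("Ben's favourite editor is Vim.")
memory.remember("The vision module uses camera feeds.")
recalled = memory.recall("How is the Neo4j memory testing going?")
print(recalled)
```

The `min_score` threshold keeps unrelated memories out of the prompt, so only details that actually connect to the current conversation are pulled in.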

So that is kruel.ai's response to our forum discussion, drawing on the code it knows and its understanding of itself, which closely matches what OpenAI's o3 models see when analyzing the project.

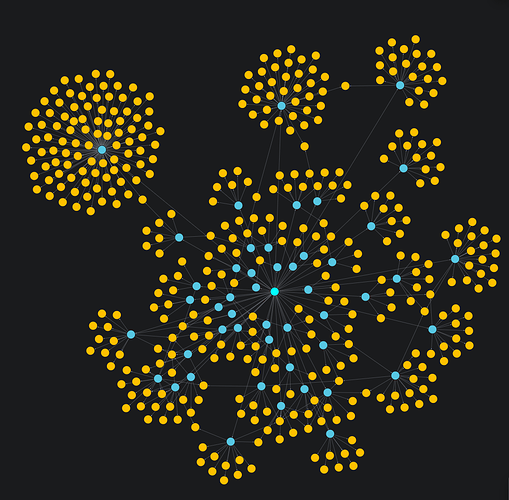

The system is designed to get smarter over time, learning from each interaction and tuning itself automatically as it learns. It is built around Neo4j and does not require training, but it does require an LLM with enough built-in knowledge for base understanding. Beyond that, you can teach it whatever it needs to know for domain-specific discussions, and it draws on its knowledge and its memory AIs to understand through its stacks, which simulate an automaton-based thinking system.
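To illustrate teaching the system without training, here is a sketch of a tiny in-memory knowledge graph: facts are added as (subject, relation, object) edges, and the neighbourhood of entities mentioned in a question is collected as context for the LLM. It only mimics the idea of a Neo4j-backed memory; no Neo4j driver or Cypher is used, and `KnowledgeGraph`, `teach`, and `context_for` are hypothetical names.

```python
import re
from collections import defaultdict

Fact = tuple[str, str, str]  # (subject, relation, object)


class KnowledgeGraph:
    """Hypothetical in-memory stand-in for a Neo4j-style memory graph."""

    def __init__(self) -> None:
        # Each node maps to every fact it takes part in, as subject or object.
        self._index: dict[str, list[Fact]] = defaultdict(list)

    def teach(self, subject: str, relation: str, obj: str) -> None:
        """Add a domain fact; no model weights are changed."""
        fact = (subject.lower(), relation, obj.lower())
        self._index[fact[0]].append(fact)
        self._index[fact[2]].append(fact)

    def _mentioned(self, question: str) -> set[str]:
        """Nodes whose name appears as a whole word or phrase in the question."""
        text = question.lower()
        return {
            node for node in self._index
            if re.search(rf"(?<!\w){re.escape(node)}(?!\w)", text)
        }

    def context_for(self, question: str, hops: int = 1) -> list[str]:
        """Collect facts within `hops` steps of any node mentioned in the question."""
        frontier = self._mentioned(question)
        seen = set(frontier)
        facts: list[Fact] = []
        for _ in range(hops):
            next_frontier: set[str] = set()
            for node in sorted(frontier):
                for fact in self._index[node]:
                    if fact not in facts:
                        facts.append(fact)
                    for other in (fact[0], fact[2]):
                        if other not in seen:
                            seen.add(other)
                            next_frontier.add(other)
            frontier = next_frontier
        return [" ".join(fact) for fact in facts]


kg = KnowledgeGraph()
kg.teach("kruel.ai", "stores memory in", "Neo4j")
kg.teach("Neo4j", "is a", "graph database")
kg.teach("Lynda", "is the default persona of", "kruel.ai")
print(kg.context_for("Where does kruel.ai keep its memory?"))
print(kg.context_for("Where does kruel.ai keep its memory?", hops=2))
```

Raising `hops` widens the neighbourhood that reaches the LLM, trading prompt size for more connected context; in a real Neo4j setup the same traversal would be expressed as a graph query instead.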