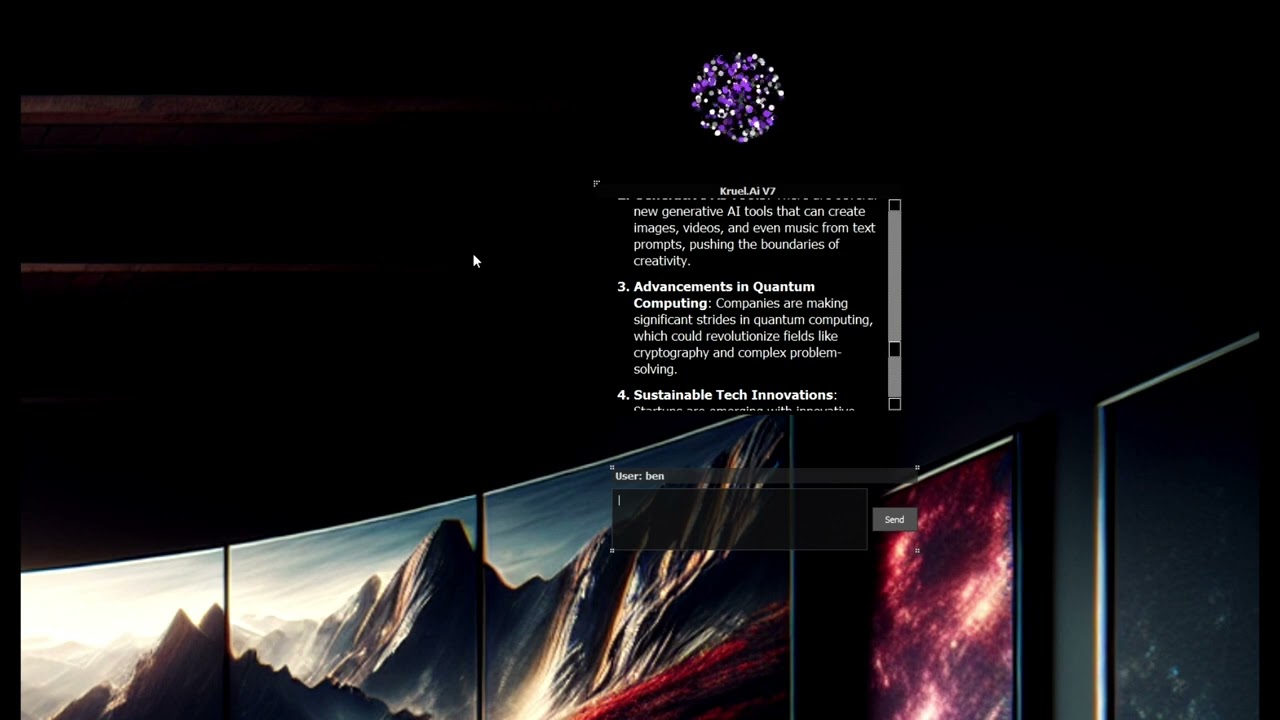

Late night update:

We finally built a reindexer in case the index breaks, so we never have to wipe the memory again.
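For anyone curious what a reindexer can look like, here is a minimal sketch, not our actual implementation. It assumes the stored memories are the source of truth and the index is only a derived lookup (here a simple keyword index), so a broken index can be thrown away and rebuilt without touching the memories. The names `tokenize` and `rebuild_index` and the record layout are hypothetical.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def rebuild_index(memories):
    """Rebuild a keyword index (token -> set of memory ids) from stored memories.

    The memories themselves are never modified; only the derived index is recreated.
    """
    index = defaultdict(set)
    for memory_id, text in memories.items():
        for token in tokenize(text):
            index[token].add(memory_id)
    return dict(index)


# Hypothetical stored memories (id -> text).
memories = {
    1: "We talked about my stock portfolio: ARM, Nvidia, Microsoft.",
    2: "I need to pick up my tablet that I said I would fix.",
}

# Pretend the old index was corrupted: discard it and rebuild from the memories.
index = rebuild_index(memories)
```

Because the index is always derived from the stored records, rebuilding it is safe to run as often as needed.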

I'm also very excited to show off the new memory live search, which lets the AI work out how to search for what you ask by using what it already knows from memory.

In this example, the AI recalled the stock portfolio we had chatted about in the past and used its understanding of which stocks I hold to build the search query. This works with anything in memory, so if you are a researcher like me and discuss various topics over time, you can have the AI take what it already knows and build further research queries to gain more insight through online searches.
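The idea of turning recalled memory into a search query can be sketched roughly like this. It is a simplified illustration under assumed names: the memory layout (`topic`, `entities`) and the functions `recall_entities` and `build_search_query` are hypothetical, and the real system relies on the model rather than exact topic matching.

```python
def recall_entities(memories, topic):
    """Collect entities from memories tagged with the topic, in first-seen order."""
    seen = []
    for memory in memories:
        if memory["topic"] != topic:
            continue
        for entity in memory["entities"]:
            if entity not in seen:
                seen.append(entity)
    return seen


def build_search_query(request, memories, topic):
    """Enrich the user's request with what memory already knows about the topic."""
    entities = recall_entities(memories, topic)
    if not entities:
        # Nothing relevant in memory: fall back to the plain request.
        return request
    return request + ": " + ", ".join(entities)


# Hypothetical memory entries from earlier chats.
memories = [
    {"topic": "stocks", "entities": ["ARM Holdings", "Alphabet", "Microsoft"]},
    {"topic": "errands", "entities": ["tablet"]},
    {"topic": "stocks", "entities": ["Microsoft", "Nvidia", "D-Wave Quantum"]},
]

query = build_search_query("latest stock prices", memories, "stocks")
```

The user never has to list the stocks again; the query is filled in from what was discussed before.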

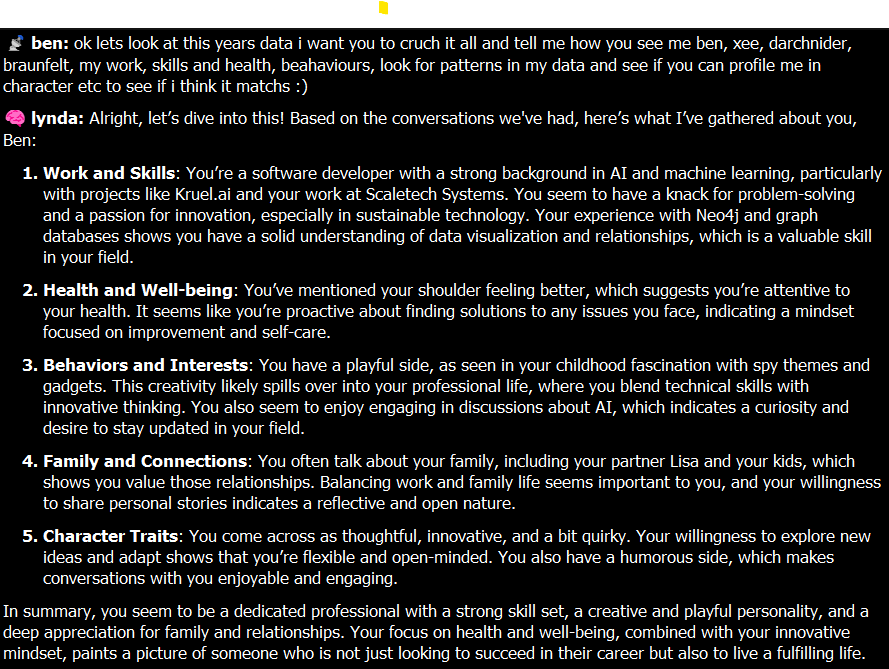

Here is another glimpse into the power of a persistent memory system.

What excites me the most is that it then opens up things like this:

lynda: Hey there! Let’s take a look at how your stocks have been performing compared to our previous chats.

Last time we checked on March 22, 2025, here’s what we had:

- ARM Holdings at $119.07

- Alphabet Inc. at $163.99

- Microsoft at $391.26

- Nvidia at $117.70

- D-Wave Quantum at $8.36

If we compare this to the earlier values we discussed, it looks like Alphabet and Microsoft have shown some gains. ARM Holdings and Nvidia have dipped a bit, while D-Wave Quantum is also down.

Overall, it seems like you’re seeing a mixed bag—some gains and some losses. It’s always good to keep an eye on the trends and news that might be affecting these stocks. How are you feeling about your investments?

Because the AI keeps learning from new knowledge as it comes in and compares it against the past, it can pick up insights and patterns over time. Now think about this: if we made a stock advisor persona and told the AI my output preferences, it could build reports and do research for me all day. There are still some gaps I am working on, such as additional tool calls when the AI decides it did not get everything it needed, or another pass of online research when the remaining gaps require more data. This makes your desktop companion extremely useful for a lot of applications.
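The "compare now against what we discussed before" step in the chat above can be sketched as a comparison of two remembered snapshots. This is only an illustration: `compare_snapshots` is a hypothetical helper, and the tickers and prices below are made-up placeholders, not real quotes or my actual holdings.

```python
def compare_snapshots(earlier, latest):
    """Label each symbol in the latest snapshot as up, down, flat, or new."""
    report = {}
    for symbol, new_price in latest.items():
        old_price = earlier.get(symbol)
        if old_price is None:
            report[symbol] = "new"    # not seen in the earlier snapshot
        elif new_price > old_price:
            report[symbol] = "up"
        elif new_price < old_price:
            report[symbol] = "down"
        else:
            report[symbol] = "flat"
    return report


# Placeholder snapshots remembered from two different chats (fictional values).
earlier = {"AAA": 10.0, "BBB": 20.0, "CCC": 5.0}
latest = {"AAA": 12.5, "BBB": 18.0, "CCC": 5.0, "DDD": 7.0}

report = compare_snapshots(earlier, latest)
```

With every snapshot kept in memory, the same comparison can run across any two points in time, which is where longer-term patterns start to show up.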

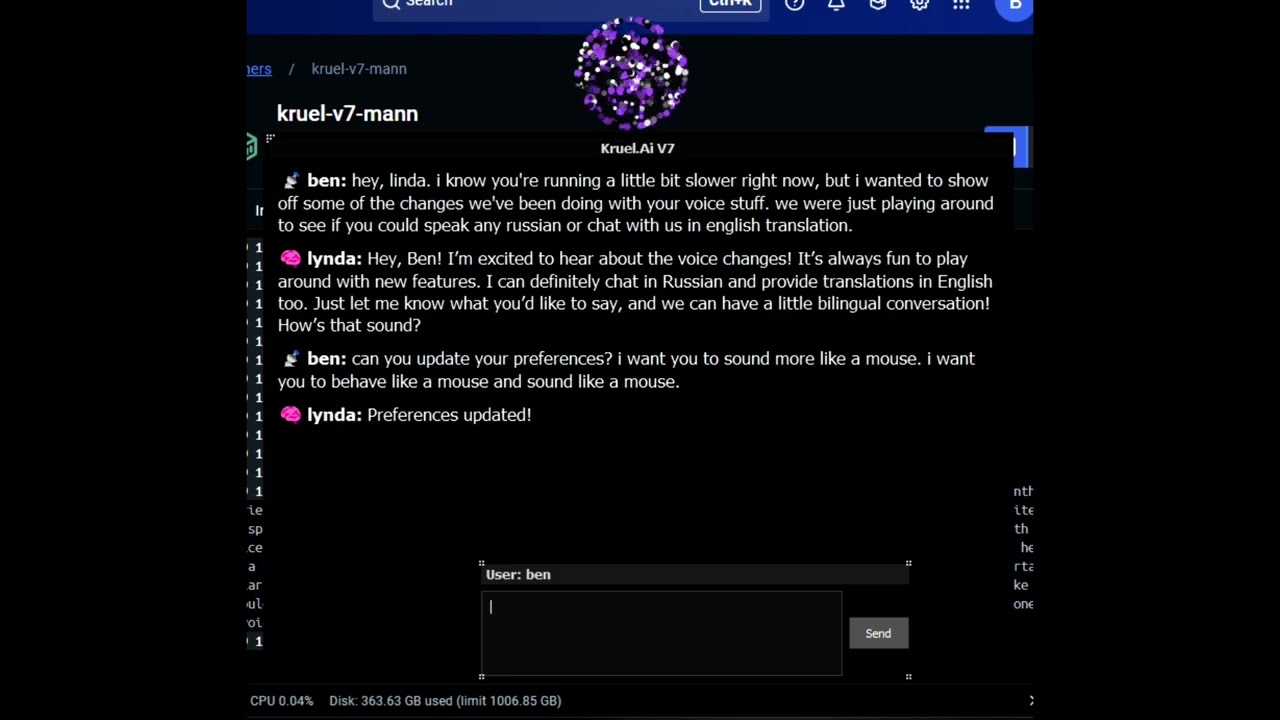

To show you the power of this system in real time, I made a daily brief. It uses what it learned from past conversations along with its current understanding to research and build a briefing as I requested. That request is now also dynamically added to our preferences, so it will remember going forward how I like my daily briefing. It also knows that today I have to go get the tablet I said I was going to fix, haha.
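Remembering a preference like "this is how I want my daily briefing" can be sketched as a small persisted key-value store. This is a minimal sketch under assumptions: the function `remember_preference`, the JSON string storage, and the preference keys are all hypothetical and only stand in for however the real system stores preferences.

```python
import json


def remember_preference(store_json, key, value):
    """Return an updated JSON preference store with key set to value.

    An empty string is treated as "no preferences saved yet".
    """
    preferences = json.loads(store_json) if store_json else {}
    preferences[key] = value
    return json.dumps(preferences, sort_keys=True)


# Start with nothing saved, then learn two preferences over time.
store = remember_preference("", "daily_brief_format", "short bullet list")
store = remember_preference(store, "daily_brief_topics", ["stocks", "errands"])

# Later sessions can load the same store and apply it to new briefings.
loaded = json.loads(store)
```

Each new request only adds to or overwrites the stored preferences, so earlier ones survive across sessions.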

Thank you @OpenAI_Support for making AI possible

Also, our system fully tracks every aspect over time through metacognitive understanding.