I have a simple python script that suggests sub topics in relation to a main argument by calling gpt4 api.

What’s incredible is that everytime the script generates another suggestion, most of the time it is quite exactly the same as the previous one.

And this happens each single time I do this.

For example, this is the main topic provided: “biography of famous people”, and these are the suggested topics:

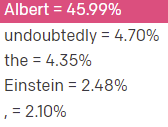

“Exploring the Rise of Albert Einstein: Triumphs and Challenges in the World of Physics.”

“The Life and Legacy of Albert Einstein: Unveiling the Genius Mind Behind the Theory of Relativity”

“The Unseen Journey: A Deep Dive into Albert Einstein’s Formative Years”

“Unraveling the Genius: The Life and Accomplishments of Albert Einstein”

As each api call is indipendent from the previous one, how is this possible that I always get suggestion about Einstein?

This is the very simple script:

prompt = f"Please suggest me a topic for a short {video_type} based on this main argument: {general_topic}. Provide me the topic in a single short sentence without any additional comments. The topic must be based on concrete characters and facts and be focused on a simple and clear argument. Avoid abstract concepts."

“system_message”: “You are a documentary narrator. Your task is to write the text for the narrator of the documentary. Use a formal and rigorous style.”

specific_topic = generate_content(prompt, system_message)

def generate_content(prompt, system_message):

try:

openai.api_key = api_keys.OPENAI_API_KEY

response = openai.ChatCompletion.create(

model=“gpt-4”,

messages=[

{“role”: “system”, “content”: system_message},

{“role”: “user”, “content”: prompt},

],

temperature=0.8,

)

return response[‘choices’][0][‘message’][‘content’].strip()

except Exception as e:

print(f"An error occurred while generating content: {e}")

return None

If I paste the same prompt in chatgpt I get, as expected, different suggestions each time.

Can someone help me to solve this incredible issue?