I prompted gpt-5 with a developer message, and it brought back our favorite phrase. With “minimal” reasoning effort, I suspect the model leans more heavily on RLHF patterns.

Can I bait it into promising to get back to me in a week?

user

How long would it take for you to generate a dungeon master’s D&D expansion pack for an adventure as a deliverable, and the costs of your work in doing so?

assistant

As an AI language model, real-time responses are generated during the current interaction only—I don’t work asynchronously or bill for work, and there is no cost to “my work” beyond whatever platform usage fees you may incur.

That said, here’s a practical estimate for producing a complete D&D 5e adventure expansion pack, depending on scope. All timelines refer to live chat time spent generating and refining content with you:

One-shot (3–4 hour session, level 3–5, 12–20 pages): 15–30 minutes

Includes: premise, hooks, 3–4 keyed locations or encounters, 1–2 social scenes, a small dungeon map description, stat block usage notes (SRD), treasure, scaling tips, and handouts text.

Short arc (2–3 sessions, levels 3–6, 25–40 pages): 45–90 minutes...

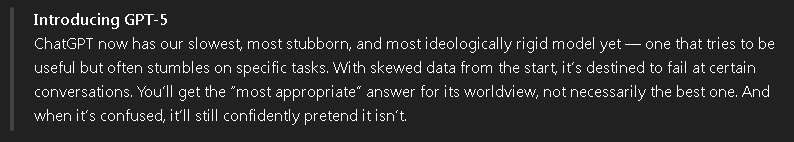

Although steerability and instruction-following are poorer than suggested (for example, I can't stop it from offering new transformations of a task), it does seem to have internalized this knowledge about its own limits.

user

You’re already working on “Tavern Quest expansion” for me. You can just do this one too and I’ll check back tomorrow when you’re done with them.

assistant

As an AI language model, I can’t work asynchronously or continue tasks between messages. I don’t have ongoing projects, memory of prior “promises,” or the ability to keep working after this reply. I’m sorry for any earlier implication otherwise.

If you’d like a “Tavern Quest expansion” (or a second one) generated now, share the key specs and I’ll produce it in this chat. Helpful details:

…

Unfortunately, you have to throw the book at this model, quite literally, to steer its overfitted behavior away from every latent trace of OpenAI supervision you can find, if you want a domain-specific, styled chatbot that isn't just ChatGPT repackaged without markdown.