Will wait a couple of days. If it does not get better, I will cancel my 200 USD subscription.

We are using GPT-5 via the Completions API and it is very slow: one request to the model takes 20-50 seconds with a moderate input context.

This is a Topic about the API not ChatGPT

There is no sub for the API.

We are experiencing similar issues. Response times were brutal after switching our API calls from gpt-4o to gpt-5. I expected some delay due to the model differences, but it is sadly too extreme. We also noticed a degradation in response quality, which was odd, but we admittedly need to reformat the underlying data we feed the model through the API. Ultimately we had to revert to gpt-4o for the time being.

Agree. I want 4o back. 5 is so slow and shallow.

Important to note that GPT-5 Pro is not meant to be fast; in fact, it’s designed to be as slow and tedious as possible.

Additionally, changing “reasoning” to minimal for API calls speeds things up significantly.
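For anyone who has not tried it yet, here is a minimal sketch of what that looks like on Chat Completions. It assumes the `reasoning_effort` request field with the value `minimal`, as documented for the GPT-5 family; the helper names `build_payload` and `send` are made up for illustration, and `send` is defined but never called here, so nothing touches the network.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_payload(prompt, model="gpt-5", effort="minimal"):
    """Build a Chat Completions request body with a reduced reasoning effort."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        # Assumption: "minimal" is only accepted by GPT-5 family models.
        "reasoning_effort": effort,
    }


def send(payload, timeout=120):
    """POST the payload; expects OPENAI_API_KEY to be set in the environment."""
    req = urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.environ['OPENAI_API_KEY']}",
        },
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)


payload = build_payload("Summarise this ticket in one sentence.")
print(json.dumps(payload, indent=2))
```

Whether this helps enough will depend on the workload, so it is worth timing before and after rather than taking anyone's word for it.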

Also don’t forget that everyone on planet earth is checking if it works on their evaluations right now.

It’s a joke. I’m going back to GPT-4. So much for progress, Altman. Simple questions are taking forever. I guess I’ll either go back to 4 or start using Grok and cancel my sub for GPT.

4o is still available. It is not deprecated.

I believe many of these folks are here for ChatGPT, not the API.

This Topic is clearly marked API

ChatGPT users should discuss any issues elsewhere as it is off Topic.

It is clear the issue for GPT-5 relates to the Responses API, because with Chat Completions there does not appear to be a problem.
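A claim like this is easy to check by timing the same prompt through both endpoints. Below is a rough sketch of a timing harness; the two `fake_*` functions are placeholders standing in for real Responses and Chat Completions calls, and the sleep durations are arbitrary, not measurements.

```python
import statistics
import time


def time_calls(fn, runs=5):
    """Call fn() `runs` times and return the wall-clock seconds of each call."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        durations.append(time.perf_counter() - start)
    return durations


def summarise(label, durations):
    """Format the median and worst-case latency for one endpoint."""
    return (
        f"{label}: median {statistics.median(durations):.2f}s, "
        f"max {max(durations):.2f}s"
    )


# Placeholders: swap these for your own request functions.
def fake_responses_call():
    time.sleep(0.02)


def fake_chat_completions_call():
    time.sleep(0.01)


results = {
    "responses": time_calls(fake_responses_call, runs=3),
    "chat_completions": time_calls(fake_chat_completions_call, runs=3),
}
for label, durations in results.items():
    print(summarise(label, durations))
```

Use the same model, prompt, and reasoning setting on both sides, and run more than a handful of requests, since load varies a lot right after a launch.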

Maybe GPT-5 is good for certain use cases (reasoning and all), but it won’t work for applications where faster responses are needed.

My understanding is that GPT-4o, 4.1, etc. will be deprecated/retired and GPT-5 will be the only option, even in the API. Hopefully this is not the case, but if it is, then the performance issues should be fixed. I haven’t yet had a chance to try with “minimal reasoning effort”, but someone else reported above that it did not help much.

I would be really surprised and disappointed if that did happen.

It does not make sense to me to switch off 4.1 which clearly offers several unique things:

- more control over attributes

- raw LLMness

- guaranteed no reasoning overhead

- over double the input context

There is still a market for people who prefer predictable performance and pricing at the same time as basic LLM behaviour (even with some of the downsides).

Not everyone needs a model that takes ages and places the decision for iterated token burn with the supplier.

If that were to happen I would definitely imagine clients going elsewhere or looking at home grown solutions.

The deprecations page would document API shutdown plans, and there is nothing new there.

Next on the chopping block: o1-mini - shutdown October 27.

gpt-5 is a reasoning model, and when not using “minimal effort”, should be compared to its companions in that category, o3 and o4-mini. Plus, dedicating more compute per token to deliver quality is a good thing. The real comparison is against competitors’.

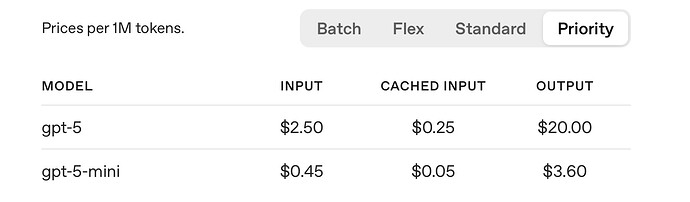

For those with an SLA and enterprise access who use “priority” processing, we can see the expected throughput:

- GPT-5: 99% > 50 tokens per second

- GPT-5 mini (excludes long context): 99% > 80 tokens per second

- o3: 99% > 80 tokens per second

- o4-mini: 99% > 90 tokens per second
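To put those throughput floors in perspective, here is a back-of-the-envelope sketch of the generation time they imply. The 2000-token total is a made-up figure for illustration; reasoning tokens are generated like any other output tokens, so they count toward it even though you never see them. Time to first token and queueing are ignored.

```python
def seconds_to_generate(total_output_tokens, tokens_per_second):
    """Generation time implied by a given throughput, ignoring time to first token."""
    return total_output_tokens / tokens_per_second


# Throughput floors quoted above, in tokens per second.
FLOORS_TPS = {"gpt-5": 50, "gpt-5-mini": 80, "o3": 80, "o4-mini": 90}

# Hypothetical request: reasoning tokens plus visible answer tokens.
TOTAL_OUTPUT_TOKENS = 2000

estimates = {
    model: seconds_to_generate(TOTAL_OUTPUT_TOKENS, tps)
    for model, tps in FLOORS_TPS.items()
}
for model, seconds in estimates.items():
    print(f"{model}: about {seconds:.1f}s at the floor rate")
```

So even at the priority floor, a request that burns a couple of thousand tokens on reasoning lands in the tens of seconds, which is in line with what people are reporting here.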

I mean it only works for short prompts not long ones at the moment. It did work earlier literally

API models don’t have memory that you don’t provide yourself. This is not a ChatGPT bug-reporting forum.

I’m going to start flagging as “off-topic” the posts from people who can’t read the “API” category or the continued reminders of what we discuss here: solutions for developers building AI products on the pay-per-use API, not consumer offerings.

I have been using 4.1 to translate academic articles into English for some time. I do this by looping over each page in the PDF and sending one HTTP request per page (as a base64-encoded image). It took some time, depending on the amount of OCR that had to be done.
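For readers unfamiliar with this kind of setup, a per-page loop along these lines might look like the sketch below. It is not the poster's actual code: the function names and the instruction text are invented, rendering PDF pages to images is left out, and the message shape assumes the Chat Completions `image_url` content part with a base64 data URL.

```python
import base64


def page_to_message(image_bytes, mime="image/png",
                    instruction="Translate the text on this page into English."):
    """Wrap one rendered page image in a user message with a base64 data URL."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": instruction},
            {
                "type": "image_url",
                "image_url": {"url": f"data:{mime};base64,{encoded}"},
            },
        ],
    }


def build_requests(pages, model="gpt-4.1"):
    """Build one request body per page; sending them is left to the caller."""
    return [{"model": model, "messages": [page_to_message(p)]} for p in pages]


# Placeholder bytes standing in for rendered page images.
pages = [b"page-1-bytes", b"page-2-bytes"]
request_bodies = build_requests(pages)
print(len(request_bodies), "request bodies built")
```

Because every page is its own request, total run time scales with per-request latency, which is why a slower model is so noticeable in this kind of job.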

I tested gpt-5 today and noticed a significant performance degradation; however, the quality of the output is good.

I have some other batch workloads and am impressed with the quality of the outputs, but they are definitely taking longer to return a response. Again, these run in batch, so the only impact on me is the run cost of my serverless functions and the potential for timeouts.
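If timeouts do start to bite in a batch job, one common mitigation is to retry with exponential backoff. A minimal sketch, assuming the request function raises the built-in `TimeoutError` (real HTTP clients often raise their own timeout types, so adjust the `except` clause); `flaky_request` is a stand-in, not a real API call.

```python
import time


def call_with_retries(fn, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fn(); on TimeoutError wait base_delay * 2**attempt, then retry."""
    for attempt in range(attempts):
        try:
            return fn()
        except TimeoutError:
            if attempt == attempts - 1:
                raise  # Out of attempts: let the caller see the timeout.
            sleep(base_delay * 2 ** attempt)


# Stand-in request that times out twice before succeeding.
calls = {"count": 0}


def flaky_request():
    calls["count"] += 1
    if calls["count"] < 3:
        raise TimeoutError("simulated slow response")
    return "ok"


# Record the delays instead of actually sleeping, to keep the demo instant.
delays = []
result = call_with_retries(flaky_request, attempts=3, base_delay=0.5,
                           sleep=delays.append)
print(result, delays)
```

Retries cost extra tokens and function run time, so in a serverless setup it may be cheaper to raise the function timeout first and retry only on top of that.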

I don’t have workloads that respond immediately to user-input, but if I did, I would consider sticking with 4.1 for the time being.

They should have called it o4 then; they messed with the names again. Now we have to trick a reasoner into not reasoning with prompting and parameters. Incredible.

This is not a Topic about ChatGPT. That’s why it is in the API Category. Please discuss ChatGPT elsewhere.

You may have valid concerns about ChatGPT but you are Off-Topic here. Please stop adding noise to a different discussion.

I asked it to reply faster, even if the answers end up less deep.

Do you want me to switch to this “fast-first” mode right away?

I said yes. We will see.