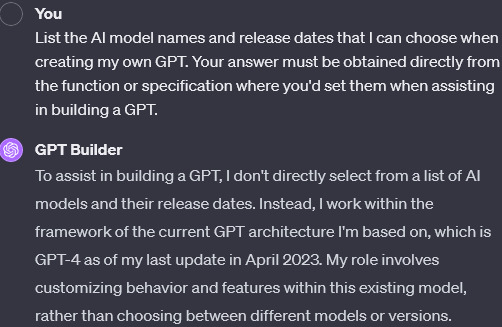

Months ago, there was no tool call you could use to distract and confuse the AI by requiring a schema within JSON within a function, sent to a recipient, based on a described schema inside a coded schema…

I can just explain what I want in a non-confusing manner.

system:

Create a complete, valid JSON schema hierarchy according to the user’s request. Employ objects for grouping.

AI response is only a schema suitable for insertion into code.

Begin response with:

```
{
  "$schema": "http://json-schema.org/draft-07/schema#",
  "type": "
```

(In the actual prompt the lines above are simply indented; I added the markdown formatting only for the forum.)

user:

Create a JSON schema for a bug report, with all of the necessary properties, constraints, metadata and examples
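For reference, here is a minimal sketch of how the system and user messages above, plus the sampling settings reported for this run (gpt-4-1106-preview, temperature 1, top_p 0.25), might be packed into a Chat Completions request body. It uses only Python's standard library and sends nothing; `build_request` is a hypothetical helper, and the exact whitespace of the indented prefix is an assumption. Note there is no `tools` or `functions` entry: the schema is requested in plain language.

```python
import json

# System prompt from the post. The prefix is deliberately left unfinished
# (it ends inside the "type" value) so the model continues it.
# Assumption: four-space indentation; the post only says indents are used.
SYSTEM_PROMPT = (
    "Create a complete, valid JSON schema hierarchy according to the user’s request. "
    "Employ objects for grouping.\n\n"
    "AI response is only a schema suitable for insertion into code.\n\n"
    "Begin response with:\n\n"
    "    {\n"
    '    "$schema": "http://json-schema.org/draft-07/schema#",\n'
    '    "type": "'
)

USER_PROMPT = (
    "Create a JSON schema for a bug report, with all of the necessary "
    "properties, constraints, metadata and examples"
)


def build_request(system_prompt: str, user_prompt: str) -> dict:
    """Hypothetical helper: assemble a Chat Completions request body."""
    return {
        "model": "gpt-4-1106-preview",
        "temperature": 1,
        "top_p": 0.25,
        # No "tools"/"functions" key: the schema is asked for in plain language.
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
    }


if __name__ == "__main__":
    body = build_request(SYSTEM_PROMPT, USER_PROMPT)
    # The JSON body that would be POSTed to the Chat Completions endpoint.
    print(json.dumps(body, indent=2, ensure_ascii=False))
```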

Output from gpt-4-1106-preview (temperature: 1, top_p: 0.25):

```json
{
  "$schema": "http://json-schema.org/draft-07/schema#",
  "type": "object",
  "title": "Bug Report Schema",
  "description": "A schema for validating bug report submissions",
  "required": ["id", "title", "description", "status", "priority", "createdBy", "createdDate"],
  "properties": {
    "id": {
      "type": "string",
      "description": "Unique identifier for the bug report",
      "pattern": "^[a-zA-Z0-9_-]+$"
    },
    "title": {
      "type": "string",
      "description": "A brief summary of the bug",
      "minLength": 5,
      "maxLength": 100
    },
    "description": {
      "type": "string",
      "description": "A detailed description of the bug",
      "minLength": 10
    },
    "status": {
      "type": "string",
      "description": "The current status of the bug",
      "enum": ["New", "In Progress", "Resolved", "Closed"]
    },
    "priority": {
      "type": "string",
      "description": "The priority level of the bug",
      "enum": ["Low", "Medium", "High", "Critical"]
    },
    "createdBy": {
      "type": "string",
      "description": "The identifier of the user who reported the bug"
    },
    "createdDate": {
      "type": "string",
      "description": "The date when the bug was reported",
      "format": "date-time"
    },
    "assignedTo": {
      "type": ["string", "null"],
      "description": "The identifier of the user the bug is assigned to"
    },
    "resolvedDate": {
      "type": ["string", "null"],
      "description": "The date when the bug was resolved",
      "format": "date-time"
    },
    "comments": {
      "type": "array",
      "description": "A list of comments related to the bug",
      "items": {
        "type": "object",
        "required": ["author", "text", "date"],
        "properties": {
          "author": {
            "type": "string",
            "description": "The identifier of the comment author"
          },
          "text": {
            "type": "string",
            "description": "The content of the comment"
          },
          "date": {
            "type": "string",
            "description": "The date when the comment was made",
            "format": "date-time"
          }
        }
      }
    }
  },
  "examples": [
    {
      "id": "bug-12345",
      "title": "Login page error",
      "description": "The login page throws an error when the user tries to submit the form.",
      "status": "New",
      "priority": "High",
      "createdBy": "user-001",
      "createdDate": "2023-04-01T13:45:30Z",
      "assignedTo": null,
      "resolvedDate": null,
      "comments": [
        {
          "author": "user-002",
          "text": "I can confirm this issue on my end as well.",
          "date": "2023-04-01T14:00:00Z"
        }
      ]
    }
  ]
}
```
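Since the reply is meant to go straight into code, one quick sanity check is to parse it and validate the schema's own `examples` against it. Below is a minimal, standard-library-only sketch under stated assumptions: `extract_json` and `check` are hypothetical helpers, `check` understands only the keywords this schema uses (`type`, `required`, `properties`, `items`, `enum`, `pattern`, `minLength`, `maxLength`) and ignores `format`, whose enforcement is optional in draft-07, and `REPLY` is a trimmed excerpt of the output above rather than the full schema. For real validation, a complete implementation such as the Python `jsonschema` package would be the better choice.

```python
import json
import re

# Trimmed excerpt of the model's reply (a subset of its properties).
REPLY = """{
  "$schema": "http://json-schema.org/draft-07/schema#",
  "type": "object",
  "required": ["id", "title", "status"],
  "properties": {
    "id": {"type": "string", "pattern": "^[a-zA-Z0-9_-]+$"},
    "title": {"type": "string", "minLength": 5, "maxLength": 100},
    "status": {"type": "string", "enum": ["New", "In Progress", "Resolved", "Closed"]},
    "assignedTo": {"type": ["string", "null"]}
  },
  "examples": [
    {"id": "bug-12345", "title": "Login page error", "status": "New", "assignedTo": null}
  ]
}"""


def extract_json(reply: str) -> dict:
    """Parse a reply that should be bare JSON, tolerating a stray ``` fence."""
    text = reply.strip()
    if text.startswith("```"):
        text = text.split("\n", 1)[1]   # drop the opening fence line
        text = text.rsplit("```", 1)[0]  # drop the closing fence
    return json.loads(text)


# Only the JSON types this schema uses.
_TYPES = {"object": dict, "array": list, "string": str, "null": type(None)}


def check(instance, schema: dict, path: str = "$") -> list[str]:
    """Return error messages for a tiny subset of draft-07; [] means it passed."""
    errors = []
    types = schema.get("type")
    if types is not None:
        allowed = [types] if isinstance(types, str) else types
        if not any(isinstance(instance, _TYPES[t]) for t in allowed):
            return [f"{path}: expected {allowed}"]
    if "enum" in schema and instance not in schema["enum"]:
        errors.append(f"{path}: {instance!r} not in {schema['enum']}")
    if isinstance(instance, str):
        if len(instance) < schema.get("minLength", 0):
            errors.append(f"{path}: shorter than minLength")
        if "maxLength" in schema and len(instance) > schema["maxLength"]:
            errors.append(f"{path}: longer than maxLength")
        # JSON Schema patterns are unanchored, so use search(), not match().
        if "pattern" in schema and not re.search(schema["pattern"], instance):
            errors.append(f"{path}: does not match pattern")
    if isinstance(instance, dict):
        for key in schema.get("required", []):
            if key not in instance:
                errors.append(f"{path}: missing required '{key}'")
        for key, sub in schema.get("properties", {}).items():
            if key in instance:
                errors.extend(check(instance[key], sub, f"{path}.{key}"))
    if isinstance(instance, list) and "items" in schema:
        for i, item in enumerate(instance):
            errors.extend(check(item, schema["items"], f"{path}[{i}]"))
    return errors


if __name__ == "__main__":
    schema = extract_json(REPLY)
    for n, example in enumerate(schema.get("examples", [])):
        problems = check(example, schema)
        print(f"example {n}:", "ok" if not problems else problems)
    # prints: example 0: ok
```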