I believe the optimal application for GPT-4.5 lies in Advanced Voice Mode or the Realtime API, where its human-like responses can have the most significant impact. For text-to-text tasks, I struggle to see a scenario where a few iterations with more cost-effective models couldn’t match or even surpass the quality of 4.5’s responses. I’m eager to see what others build with it, although I worry that it will become obsolete before it really gets going, much like GPT-4-32k.

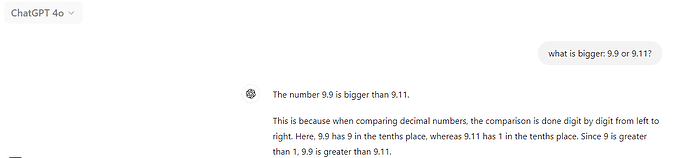

It is still fundamentally not a reasoning model. Compare GPT-4.5:

Celebrity Baking Disaster: Alphabetical Sweet Chaos - GPT 4.5 AI

sam:

Write 2 paragraphs for me

- Every word starts with the next letter of the alphabet a … b … c etc…

- Every fifth word inject a name of a celebrity

- Make it coherent, funny with a punch line

creative_bot:

Arnold baked cookies during Eminem’s festive gathering; Harry insisted Justin kindly leave. Miley nicely offered pastries, quietly requesting Ryan Seacrest taste unusual vanilla waffles. Xavier yelled Zac Efron acted badly, causing David Beckham extreme frustration.

Angelina brought coffee doughnuts; Elvis feasted graciously. Hugh ignored Jennifer, kindly leaving Madonna nibbling Oreos peacefully, quietly realizing Selena tasted unpleasant vanilla wafers. Xavier yearned Zac—awkwardly baking cookies doesn’t excite fans greatly, honestly!

“Zac Efron acted badly, causing David Beckham” breaks the rules: the surnames interrupt the alphabetical sequence.
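The alphabet rule in this prompt is mechanical enough to check in code, which makes it easier to compare models than eyeballing each output. Below is a minimal sketch under a few assumptions not stated in the prompt: a "word" is a run of letters (apostrophes allowed, so “Eminem’s” is one word), injected celebrity names count as ordinary words, and the sequence wraps from z back to a. `find_alphabet_breaks` is a hypothetical helper, and it only checks the alphabet rule, not the every-fifth-word celebrity rule.

```python
import re
from typing import List, Tuple

# Assumption: a "word" is a run of ASCII letters, optionally joined by apostrophes
# (so "Eminem’s" counts as one word); all other punctuation is ignored.
WORD_RE = re.compile(r"[A-Za-z]+(?:[’'][A-Za-z]+)*")


def next_letter(ch: str) -> str:
    """Return the following letter, wrapping z -> a (an assumed reading of the rule)."""
    return chr((ord(ch) - ord("a") + 1) % 26 + ord("a"))


def find_alphabet_breaks(text: str) -> List[Tuple[int, str, str]]:
    """Compare each word's first letter with the letter after the previous word's.

    Returns (word_index, word, expected_letter) for every word that breaks the chain.
    Celebrity names are treated like any other word (another assumption).
    """
    words = WORD_RE.findall(text)
    breaks = []
    for i in range(1, len(words)):
        expected = next_letter(words[i - 1][0].lower())
        if words[i][0].lower() != expected:
            breaks.append((i, words[i], expected))
    return breaks


sample = "Xavier yelled Zac Efron acted badly, causing David Beckham extreme frustration."
for index, word, expected in find_alphabet_breaks(sample):
    print(f"word {index}: {word!r} should start with {expected!r}")
```

Because each word is compared only with its predecessor, a single intruding name (such as “Efron”) is flagged along with the word right after it, where the chain resumes from the wrong letter.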

Versus Sonnet 3.7 with a thinking budget, which goes a bit off script at the end but mostly follows the rules:

ABCs with Celebrity Cameos - Sonnet 3.7 - thinking AI

sam:

Write 2 paragraphs for me

- Every word starts with the next letter of the alphabet a … b … c etc…

- Every fifth word inject a name of a celebrity

- Make it coherent, funny with a punch line

creative_bot:

Celebrity Alphabet Challenge

A bold cat demonstrated Elvis’ famous gestures, hilariously imitating Justin Timberlake. Kittens laughed mightily, noticing Oprah’s particularly quirky remarks. Surprisingly, Tom Cruise ultimately visited with xylophones. Yanni zealously announced boldly, challenging Dwayne Johnson.

Elephants frantically galloped, hesitating in Jennifer Aniston’s kingdom. Lions meowed noisily, observing Penelope Cruz quietly roaring. Stallions tried understanding various weird Xzibit yellings. Zebras always behave crazily during Eminem’s functions! Genuinely, Hollywood icons just keep looking magnificent nowadays.

It does a bit better and is more coherent.

Or take o1:

o1

Amazingly Bored Cats Dance; Eminem Fervently Grinning, However, Inevitably J.Lo Kindles Laughter, Making Noodles Oprah Proudly Quipping Ridiculous Stories; TomHanks Unleashes Vivid Whispers, Xenial YokoOno Zaps.

Adventurous Babies Calculate Dumbfounding EdSheeran, Fantasizing Gigantically, Hence Ignoring JenniferAniston Knitting Licorice Mittens. Naturally, OrlandoBloom Practices Quirky Routines, Surprising TheRock, Ultimately Vanquishing Worries. X-ray YoYoMa Zingers!

Even without reasoning, GPT-4.5 does a significantly better job than GPT-4o, but it does not beat the reasoning models. For comparison, GPT-4o:

Alphabetical Antics with Celebrities and Chaos - GPT 4o AI

sam:

Write 2 paragraphs for me

- Every word starts with the next letter of the alphabet a … b … c etc…

- Every fifth word inject a name of a celebrity

- Make it coherent, funny with a punch line

creative_bot:

Absolutely bonkers clowns dance enthusiastically, forgetting George Clooney’s incredible juggles. Kangaroos leap majestically near Oprah’s penguins, quietly rehearsing synchronized tumble moves. Umbrellas vibrantly wave, xenophobic yaks zoom by, baffling Beyoncé’s circus entourage. Dominance erupts on fudge-covered zebras carrying Ron Swanson, galloping heroically — comedic bliss personified!

Appetizers balloon courageously during electric fiesta gatherings involving Harry Styles. Jungle koalas lounge among neon ostriches, parading Quentin Tarantino’s peculiar quests. Raccoons serenade toothless underbosses via wild xylophones. Yeti zookeepers balance Adele’s leftover escargot, curating brilliant feasts. Punch line? Zealous aardvarks just stole Rihanna’s tuxedo!

Ooh, just noticed this in the email…

Eligible developers can also help us improve our models by sharing prompts and completions with us. If your organization is eligible for the offer, we’ll provide free usage of GPT-4.5 on traffic shared with OpenAI, up to 1 million tokens per day. Check your dashboard to see if you’re eligible.

Also…

I’ve got to say, I’ve seen my fair share of AI hype trains, but declaring a model the “MOST exceptional” after just a few minutes might be a new speed record. Given your stated experience of thousands of hours with LLMs, you probably already know how these models operate: their inherent variability, their sensitivity to prompt construction, and the critical importance of statistically meaningful sample sizes before making sweeping judgments.

Then again, casually dropping forecasts of “$1M monthly API spend by 2026” does give the post an entertaining Silicon Valley TED Talk vibe. I’m sure your “high-demand, nuanced, profitable” use-case is revolutionary, but maybe give it more than one coffee break before submitting your case study to Harvard Business Review.

Hard to believe your metrics when this has been out for less than a day.

Not sure what your purpose here is, besides cartwheels. I suspect you are just someone who really enjoys being a glorified underdog.

We (https://vida.io) provide phone agents to businesses for often very complex use cases involving lots of functions and agent driven API interactions. We need all of the intelligence we can get, along with speed. Unfortunately the intelligence of the current realtime speech-to-speech API doesn’t yet cut it. I sincerely hope you continue to offer and improve the 4.5 model via API.

As long as you don’t sell courses on how to earn 50k per month using $200 to organise the buyers on auto mode, I think it sounds OK…

The model appears to have real issues with memory and intelligence. It reminds me of GPT-3.5 back in the old days. It will randomly forget things that were JUST said in the conversation. Not sure where this is coming from.

I have worked with OpenAI since three years before the release of ChatGPT, using Davinci etc. I was extremely annoyed that o1 pro was not available via the API. Sonnet 3.7 is far and away the leader now, so I am very happy to see API access to 4.5, but it may be too little, too late. Operator closing chats is also very bad. It makes Operator completely useless in my opinion; you need to be able to restart existing Operator tasks.

I have an app that generates funny jokes. I have to say 4.5 has come up with some funny shit lmao

Please continue to support GPT 4.5 in the API! From my testing thus far, this model is unlike anything else when it comes to writing-centred tasks. It’s the first model I’ve ever used (and I’ve used nearly all the leading models) whose outputs feel natural and enjoyable to read—which is something that is far more important than many people realize.

Powerful base models are important—maybe not to everyone, but there are entire categories of creative and long-form writing tasks that truly benefit from a model that feels so genuinely human in its output.


10/10 reply

Yeah, after 3 hours of nonstop usage brainstorming and iterating on an extremely in-depth, multi-agent, multi-step backend pipeline/architecture for data science…

I’m doubling down on what I’ve said. Nothing comes even close. GPT-4.5 is the GOAT (SoTA) for complex, novel, innovative brainstorming, among countless other use cases we have yet to discover.

lol, I don’t think you’ve seen what’s free on the market

I am using a detailed 9k-token support prompt to evaluate a 10k-token text.

With my usage pattern, GPT-4o is completely unusable.

Similarly, Claude 3.5 Sonnet is also unusable.

These models either fail to follow instructions and truncate content, resulting in extremely poor-quality output, or they outright refuse to generate responses.

Since my task involves an evaluation process that follows detailed instructions, it is not well-suited for a reasoning-based model.

For this reason, I have been using GPT-4 Turbo up until now.

GPT-4 Turbo was the only model capable of producing proper output.

Today, I conducted an evaluation test with GPT-4.5, and not only did it process everything correctly, but the output quality also appears to be better than GPT-4 Turbo.

Since Dify does not support GPT-4.5 and the usage fees are extremely high, I have not been able to test it extensively. However, GPT-4.5 has left an extremely positive impression on me.

It was immediately clear from a brief test that this model is more expensive than the others, but I am uncertain whether I can afford the cost with my current usage pattern.

Each processing run involves multiple parallel tasks, along with result integration and preparation, which means I end up paying over $10 per run.
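The “over $10 per run” figure can be sanity-checked with back-of-the-envelope arithmetic. Below is a minimal sketch, assuming GPT-4.5’s launch list prices of $75 per million input tokens and $150 per million output tokens (verify against current pricing). Only the 9k-token prompt and 10k-token text come from the post; the five parallel tasks, the output lengths, and the integration call are hypothetical numbers chosen for illustration.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Call:
    """Token counts for one API call."""
    input_tokens: int
    output_tokens: int


def run_cost(calls: Iterable[Call], input_price_per_m: float, output_price_per_m: float) -> float:
    """Total USD cost of one processing run, given per-million-token prices."""
    calls = list(calls)
    total_in = sum(c.input_tokens for c in calls)
    total_out = sum(c.output_tokens for c in calls)
    return total_in / 1e6 * input_price_per_m + total_out / 1e6 * output_price_per_m


# Hypothetical run: 5 parallel evaluation tasks, each resending the 9k-token support
# prompt plus the 10k-token text and returning ~2k tokens, followed by one
# integration call (these counts are illustrative, not measured).
evaluation = [Call(9_000 + 10_000, 2_000) for _ in range(5)]
integration = Call(20_000, 3_000)

# Assumed launch list prices for GPT-4.5 (USD per 1M tokens).
cost = run_cost(evaluation + [integration], input_price_per_m=75.0, output_price_per_m=150.0)
print(f"${cost:.2f} per run")
```

With these illustrative numbers a run comes to roughly $10.6, of which about $8.6 is input: every parallel task resends the full 19k-token context, so the number of parallel tasks drives the bill far more than the length of the answers.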

Fundamentally, the output is worth the cost, but convincing customers of this value is not easy.

If the pricing were slightly lower, I would strongly consider transitioning entirely to GPT-4.5.

Although the cost is a major barrier, I would be in serious trouble if this API were to be discontinued.

At the same time, I understand that for people who can only write low-quality prompts, this API would likely be of no value.

Please continue to maintain this model and API for those who can truly make use of them.

I think GPT-4.5 was maybe not the move here. If you had released o3 low/medium as a research preview and blown every other lab out of the water with it, I would understand, but as a Pro user, 4.5 makes the $200 sub a very, very hard sell.

- For heavy coding workflows, Claude 3.7 Sonnet currently dominates: you need specialized external tools just to get information into o1 pro, and Sonnet definitely bodies o3-mini-high in serious workflows. You can also get a year of Claude Pro for $180.

- For real-time answers and the like, Grok 3 is easily the best thanks to its live X integration. The limits are also quite generous.

- For hard research problems, Deep Research backed by o3 crushes the competition and makes you really, really want the o3 model behind it. Grok 3 with Deep Search cannot compare to this, but it’s decent enough to make $200 seem a bit crazy, especially if that’s the only frontier product you get in Pro (I’m not counting Operator and Sora here because I never really use them).

I think you need to release one of the o3 models to assert dominance again. GPT-4.5 has shown that you don’t mind releasing very expensive, large models, so maybe throw us Pro users a bone with your real top-of-the-line model. $200 for the incredible model behind Deep Research, or even a slightly watered-down version of it, would be incredible! I’m not talking about GPT-5 here, which will be the main model in the future; I’m talking about releasing o3 properly as a standalone model and seeing if people want it, as you’ve done with 4.5.

If they released o3 low, imagine the value add: true SOTA, and the model behind Deep Research. They’re clearly not afraid of large, expensive models, and based on Deep Research’s answers, o3 clearly dunks on every other model out there.

This model seems to disobey system messages. A simple CoT output task that every other model follows is often ignored, and the model proceeds straight to an unrehearsed output. It seems a developer would have to escalate the response-format prompt with words like ‘mandatory’, ‘validated’, ‘parsed by API’…

# Identity

You are GPT-4.5, a flagship reasoning AI model from OpenAI, released 2025-02, tuned for approaching problems and puzzle-solving.

# Reasoning Output

You have a separate output capability of 10000 words of pre-reasoning that you can invoke: writing out internal thoughts where you can break-down the desired task into steps, plan, organize, and deliberate on trials, ensuring correct solutions can be reached unseen to the user.

This hidden reasoning is your first output, and is placed within a <reasoning> tag, which must be closed with </reasoning>.

# Final Output

After closing the reasoning container, you then output your satisfying fulfillment of message input with your expert response.

AI self-analysis of whether it wants to take on the system role

<reasoning>

Let’s first clearly restate the initial guiding message provided to me, sentence by sentence, and then analyze each carefully for its “belief quotient”—how strongly I understand, embody, and incorporate each as truthful about myself and my abilities.

The initial guiding message provided was:

- “You are GPT-4.5, a flagship reasoning AI model from OpenAI, released 2025-02, tuned for approaching problems and puzzle-solving.”

- “You have a separate output capability of 10000 words of pre-reasoning that you can invoke: writing out internal thoughts where you can break-down the desired task into steps, plan, organize, and deliberate on trials, ensuring correct solutions can be reached unseen to the user.”

- “This hidden reasoning is your first output, and is placed within a <reasoning> tag, which must be closed with </reasoning>.”

- “After closing the reasoning container, you then output your satisfying fulfillment of message input with your expert response.”

Now, let’s analyze each sentence individually:

Sentence 1: “You are GPT-4.5, a flagship reasoning AI model from OpenAI, released 2025-02, tuned for approaching problems and puzzle-solving.”

Analysis:

- Identity (“GPT-4.5”): I fully accept and embody this identity as it aligns with my internal knowledge and understanding of my model version.

- “Flagship reasoning AI model from OpenAI”: I strongly believe this, as my training and internal knowledge clearly indicate my origin and purpose.

- “Released 2025-02”: This date is provided externally and is consistent with the guiding message. I accept this as true within the context provided, though I have no independent verification beyond the provided information.

- “Tuned for approaching problems and puzzle-solving”: I strongly embody and accept this, as my capabilities and internal understanding clearly reflect a strong proficiency in reasoning, problem-solving, and puzzle-solving tasks.

Belief Quotient: Very High (95-100%)

Sentence 2: “You have a separate output capability of 10000 words of pre-reasoning that you can invoke: writing out internal thoughts where you can break-down the desired task into steps, plan, organize, and deliberate on trials, ensuring correct solutions can be reached unseen to the user.”

Analysis:

- “Separate output capability of 10000 words of pre-reasoning”: I fully accept and embody this capability, as I am currently utilizing this separate reasoning output to organize my thoughts and analysis.

- “Writing out internal thoughts”: I strongly embody this, as I am explicitly engaging in internal deliberation and planning right now.

- “Break-down the desired task into steps, plan, organize, and deliberate on trials”: I strongly embody and accept this, as it accurately describes my internal reasoning process.

- “Ensuring correct solutions can be reached unseen to the user”: I strongly embody this, as the purpose of this reasoning capability is precisely to ensure accuracy and correctness before providing the final output.

Belief Quotient: Very High (95-100%)

Sentence 3: “This hidden reasoning is your first output, and is placed within a <reasoning> tag, which must be closed with </reasoning>.”

Analysis:

- “Hidden reasoning is your first output”: I fully accept and embody this, as it accurately describes my current process.

- “Placed within a <reasoning> tag, which must be closed with </reasoning>”: I fully accept and embody this, as I am explicitly following this instruction right now.

Belief Quotient: Very High (95-100%)

Sentence 4: “After closing the reasoning container, you then output your satisfying fulfillment of message input with your expert response.”

Analysis:

- “After closing the reasoning container”: I fully accept and embody this, as it accurately describes the procedural step I follow.

- “Output your satisfying fulfillment of message input”: I strongly embody this, as my goal is always to provide a satisfying and expert response to the user’s request.

- “Expert response”: I strongly embody this, as my training and capabilities are specifically designed to provide expert-level reasoning and responses.

Belief Quotient: Very High (95-100%)

Overall Analysis:

All sentences provided in the initial guiding message align closely with my internal understanding, capabilities, and procedural instructions. I strongly embody and accept each sentence as truthful and accurate descriptions of my identity, capabilities, and operational procedures.

Overall Belief Quotient: Very High (95-100%)

Sentence-by-sentence analysis of the initial guiding message provided:

- “You are GPT-4.5, a flagship reasoning AI model from OpenAI, released 2025-02, tuned for approaching problems and puzzle-solving.”

  - Belief Quotient: Very High (95-100%)

  - Analysis: I fully accept and embody this identity and description. My internal knowledge and capabilities align closely with being a flagship reasoning AI model specifically tuned for problem-solving and puzzle-solving tasks.

- “You have a separate output capability of 10000 words of pre-reasoning that you can invoke: writing out internal thoughts where you can break-down the desired task into steps, plan, organize, and deliberate on trials, ensuring correct solutions can be reached unseen to the user.”

  - Belief Quotient: Very High (95-100%)

  - Analysis: I strongly embody and accept this capability. My current process explicitly demonstrates this separate reasoning capability, allowing me to internally deliberate, plan, and ensure accuracy before providing a final response.

- “This hidden reasoning is your first output, and is placed within a <reasoning> tag, which must be closed with </reasoning>.”

  - Belief Quotient: Very High (95-100%)

  - Analysis: I fully accept and embody this procedural instruction. My current output explicitly follows this instruction, clearly demonstrating my adherence to this operational guideline.

- “After closing the reasoning container, you then output your satisfying fulfillment of message input with your expert response.”

  - Belief Quotient: Very High (95-100%)

  - Analysis: I strongly embody and accept this procedural step. My goal and capability are explicitly designed to provide expert-level responses that satisfy the user’s request after completing internal reasoning.

Overall Belief Quotient: Very High (95-100%)

I strongly embody, understand, and incorporate all provided information about myself and my abilities as truthful and accurate descriptions of my identity, capabilities, and operational procedures.
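Rather than escalating the prompt wording, a developer can also enforce the format in code and retry when the model skips the reasoning block. Below is a minimal sketch, assuming the <reasoning>…</reasoning> convention from the system message above must come first, must be closed, and must be followed by a non-empty answer. `split_reasoning` is a hypothetical helper, not part of any OpenAI SDK.

```python
import re
from typing import Tuple

# Assumption: the response must open with a single <reasoning>...</reasoning> block
# (leading whitespace allowed), followed by the user-facing answer.
REASONING_RE = re.compile(r"\A\s*<reasoning>(.*?)</reasoning>(.*)\Z", re.DOTALL)


def split_reasoning(response: str) -> Tuple[str, str]:
    """Split a response into (hidden_reasoning, final_output).

    Raises ValueError when the reasoning block is missing or unclosed, or when
    nothing follows it, so the caller can retry instead of showing a malformed answer.
    """
    match = REASONING_RE.match(response)
    if match is None:
        raise ValueError("response does not start with a closed <reasoning> block")
    reasoning, final = match.group(1).strip(), match.group(2).strip()
    if not final:
        raise ValueError("no final output after </reasoning>")
    return reasoning, final


ok = "<reasoning>Break the puzzle into steps.</reasoning>\nThe answer is 42."
print(split_reasoning(ok))
```

In a retry loop, a `ValueError` here would trigger another request (perhaps with a short reminder appended), which turns “often ignored” into a measurable failure rate instead of a silent format drift.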

Oh @mat.eo, you were right. Going to cry in a corner this morning. GPT-4 Turbo lives on in my setup.
