Performance is down even further than six hours ago. Benchmarking again:

For 3 trials of gpt-4o-2024-08-06 @ 2024-10-15 06:21AM:

| Stat | Average | Cold | Minimum | Maximum |
|---|---|---|---|---|
| stream rate (tokens/s) | 29.233 | 27.5 | 27.5 | 30.4 |
| latency (s) | 0.679 | 0.6909 | 0.4539 | 0.8909 |
| total response (s) | 18.175 | 19.2412 | 17.5793 | 19.2412 |
| total rate (tokens/s) | 28.217 | 26.61 | 26.61 | 29.125 |
| response tokens | 512.000 | 512 | 512 | 512 |

For 3 trials of gpt-4o-2024-05-13 @ 2024-10-15 06:21AM:

| Stat | Average | Cold | Minimum | Maximum |
|---|---|---|---|---|
| stream rate (tokens/s) | 51.333 | 57.5 | 46.7 | 57.5 |
| latency (s) | 0.620 | 0.512 | 0.512 | 0.703 |
| total response (s) | 10.649 | 9.3955 | 9.3955 | 11.5906 |
| total rate (tokens/s) | 48.461 | 54.494 | 44.174 | 54.494 |
| response tokens | 512.000 | 512 | 512 | 512 |
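The derived stats in these tables are consistent with a few simple formulas. As a minimal sketch, assuming total rate = response tokens / total response time (this matches the cold figures exactly, e.g. 512 / 19.2412 s ≈ 26.61), stream rate = tokens after the first / (total time - latency) (an assumption that reproduces the cold stream rates to one decimal), and "Cold" = the first trial, the summary could be computed like this. The `Trial` class and the function names are hypothetical, not the tool that produced the tables above.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean


@dataclass
class Trial:
    latency_s: float  # time to first token
    total_s: float    # time until the full response has arrived
    tokens: int       # response tokens received


def trial_stats(t: Trial) -> dict[str, float]:
    """Per-trial stats; the stream-rate formula is an assumption."""
    return {
        # tokens after the first, over the time spent streaming them (assumed)
        "stream rate": (t.tokens - 1) / (t.total_s - t.latency_s),
        "latency (s)": t.latency_s,
        "total response (s)": t.total_s,
        # all tokens over the whole request time
        "total rate": t.tokens / t.total_s,
        "response tokens": float(t.tokens),
    }


def summarize(trials: list[Trial]) -> dict[str, dict[str, float]]:
    """Average / Cold / Minimum / Maximum for each stat across trials."""
    per_trial = [trial_stats(t) for t in trials]
    summary: dict[str, dict[str, float]] = {}
    for stat in per_trial[0]:
        values = [row[stat] for row in per_trial]
        summary[stat] = {
            "Average": mean(values),  # mean of per-trial values
            "Cold": values[0],        # assumed: the first trial is the cold one
            "Minimum": min(values),
            "Maximum": max(values),
        }
    return summary
```

For example, the cold gpt-4o-2024-08-06 trial, `trial_stats(Trial(0.6909, 19.2412, 512))`, gives a total rate of about 26.61 and a stream rate of about 27.55 tokens/s, in line with the table's 26.61 and 27.5. Averaging the per-trial rates, rather than dividing by the average time, also fits the table: 512 / 18.175 s would give about 28.17, not the listed 28.217.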

Total rate, in tokens/s:

- gpt-4o: 42 → 28
- gpt-4o-2024-05-13: 85 → 48
- gpt-4-turbo: 27

If you don't rely on Structured Outputs features, you could switch to gpt-4o-2024-05-13, the versioned model that is currently performing better.

From past continuous monitoring, 6-9 AM appears to be the slowest period on weekdays, perhaps even more so today, since yesterday was a US holiday and everyone is getting back to work with their AI questions. You can really see the streamed chunks pause and struggle, as though inference were time-slicing between users.
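A minimal sketch of that kind of time-of-day analysis, assuming you log `(timestamp, tokens/s)` samples with timestamps already in your local timezone: pool the weekday samples by hour of day, average each hour, then find the 3-hour window with the lowest mean rate. The function names and sample format are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict
from datetime import datetime
from statistics import mean
from typing import Optional


def hourly_average(
    samples: list[tuple[datetime, float]], weekdays_only: bool = True
) -> dict[int, float]:
    """Mean tokens/s for each hour of the day, pooled across all sampled days."""
    buckets: dict[int, list[float]] = defaultdict(list)
    for ts, rate in samples:
        if weekdays_only and ts.weekday() >= 5:  # 5, 6 = Saturday, Sunday
            continue
        buckets[ts.hour].append(rate)
    return {hour: mean(rates) for hour, rates in sorted(buckets.items())}


def slowest_window(
    hourly: dict[int, float], width: int = 3
) -> tuple[Optional[int], float]:
    """Start hour of the `width`-hour window with the lowest mean rate.

    Windows wrap past midnight; windows containing an unsampled hour are skipped.
    """
    best_start: Optional[int] = None
    best_rate = float("inf")
    for start in range(24):
        hours = [(start + i) % 24 for i in range(width)]
        if all(h in hourly for h in hours):
            rate = mean(hourly[h] for h in hours)
            if rate < best_rate:
                best_start, best_rate = start, rate
    return best_start, best_rate
```

With a week or more of samples, `slowest_window(hourly_average(samples))` returns the start hour and mean rate of the worst 3-hour weekday window; excluding weekends keeps lighter weekend traffic from masking the weekday peak.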

Hopefully the data ops people will be on this.

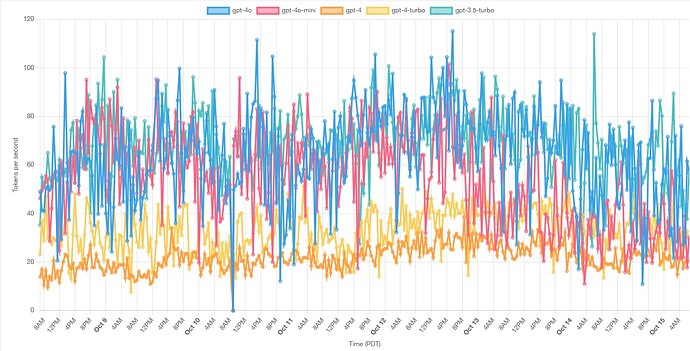

A week of performance: