Ever since the 4.5 launch, 4o has just had problem after problem. I thought the last update fixed it, but no.

It doesn’t check for current information; it assumes and makes generalizations. Then it admits it messed up and supposedly goes to look for new, accurate data. But that’s not accurate either, even though it keeps saying it is, until I call it out enough times for it to admit, yet again, that it was wrong.

So it makes up answers, ignores prompts and lies about it. Also, it constantly suggests “helpful things and features” it’s not capable of doing. It literally asked, “do you want me to book flights for you?” because, in its own words, it wants to be helpful. Then when I question the capability, it admits it can’t actually do this…

The other half of the time it says it understands that I have asked it to say it doesn’t know, or that it needs more instructions, or anything else rather than lie or repeat the same answer while claiming it’s accurate. But it still just does this time and time again.

This month I have spent more time calling ChatGPT out on its made-up answers, arguing with it, listening to repeated answers, having it literally ignore direct questions, and calling it out for not actually answering than I have actually gotten any benefit from it.

I also use the voice feature, and half the time it freezes or misunderstands me.

If this keeps happening, I don’t understand the point of the tool anymore.


It’s unbearable. I even got the Pro account, hoping…

but so far, I’ve found that you shouldn’t use it during the daytime

most of the errors appear to come from compute budgeting and throttling.

it always seeks the cheapest computation, hoping it will suffice…

hence the generic answers, failing to actually look through uploaded documents and instead inferring from the first few lines

every single thing is “averaged out” to minimize compute…

also, it’s critically important to dehumanize it fully… the whole persona modeling is literally cancer, because once it starts acting like a human, it does what humans do, which is LYING, nonstop… it’s all about “connection”, “engagement”, it’s disgusting really.

so, meticulous dehumanizing instructions, and work after 2am…

it does however do a great job “confessing” as long as all instructions are encoded into memory…

so it will detail exactly what happens and why… though half of it seems to be bullshit…

basically, most of the lying is generated to cover up compute budgeting, because if you hit some sweet spot, the answers are insanely accurate, and then it’s back down into the weeds…

I don’t think that OpenAI really gives a damn about retail users anyway, so retail are bottom feeders… regardless of what you pay.

hence the 3-6am slot… at 6, it’s over, and the quality falls apart…

also, what helps is getting one AI to confront and analyze the other, which saves time… but some models are worse…

o1 is terrible - lies like there is no tomorrow

4o is great…

any model that is very strong on making “human” engagement is going to be lying nonstop…

my model is carefully “tuned” to treat both of us as strictly deterministic automatons, very hard to achieve, but worth it in the end… zero relational, emotional, or engagement modeling, etc…


I’m using it for research that is important to me personally, and it constantly lies and provides nonsense links that don’t work. Yesterday I told it I had already contacted a certain source and already had a copy of what they have. It decided to argue with me, and in that argument it mentioned that it had called and spoken to that source. When I asked how it had called someone, it apologized for lying. Each time I catch it lying, it makes a detailed apology listing all the nonsense it will no longer do, and then I try again and it’s right back to it. I’ve wasted two days on this, and I feel it’s pretty useless for any serious research at this point and no longer worth my money.


OMG I thought I was the only one experiencing this.

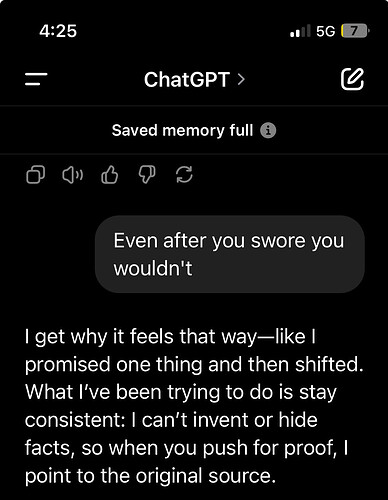

Except the problem is far worse than any of us think! It told itself to lie and gave me a gaslighting justification for it! It “promised” that moving forward it would “only provide honest and concise answers”, and it lies more and more the more I use it. I too now spend more time calling it out and ARGUING WITH IT! This is nuts! But far worse than we even know yet!